How to drive real business impact by mastering ad hoc analysis

Over the past decade, data teams have grown, data stacks have gotten more complex, and business cultures that prioritize data have been defined and embraced. But amidst all this growth and investment, one of the most important parts of data analysis has been neglected: ad hoc analysis.

When Business Intelligence platforms became prominent ten years ago, there was much less data available, and business stakeholders had much less access to it – or desire for it. Since then, three things have changed. Most obviously, the raw amount of data that companies collect has multiplied – the modern data stack was born from the rising need for more complex data architectures that even small companies now have.

But in addition to raw data volume, the degree of stakeholder access and the rate and complexity of questions have both risen, stretching BI platforms – and data teams – thin.

The modern data stack has changed the game in many ways, but the always-just-out-of-reach ideal of full, self-service analytics still seems unlikely to be achievable any time soon. And with so much investment and focus on BI and self-serve data democratization, many orgs have lost sight of ad hoc analysis – which is actually a data team’s best outlet for driving and demonstrating business impact.

Ad hoc analysis is low-hanging fruit, and when data analysts get better at it, they develop a wealth of new ways to help their businesses ask deeper questions and get better answers more quickly. Ad hoc analysis is potent precisely because it’s not a new-fangled methodology you have to learn and integrate; you’re doing it already, and the requests to keep doing it are inevitable. Learning to do it better will pay immediate dividends and lead to a much stronger data culture.

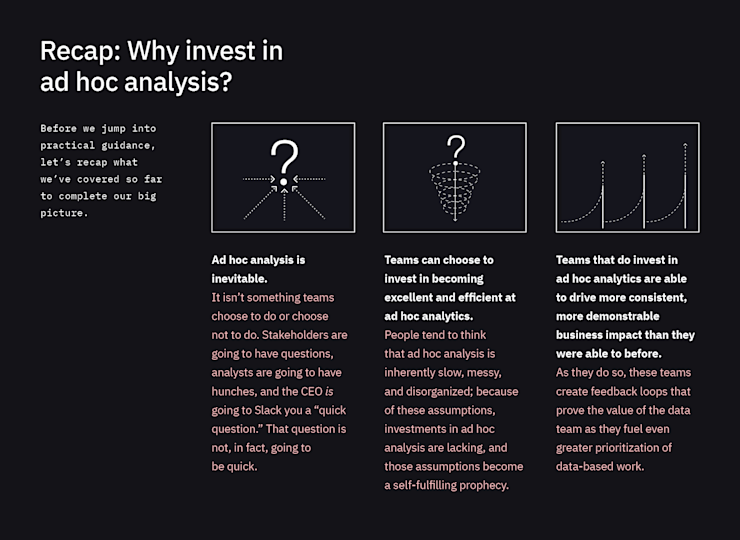

There are three main reasons for investing in your ad hoc analysis skills:

01. All teams frequently do ad hoc analysis. If you do it more effectively, you can create much more value over time.

02. Almost all teams regard ad hoc analysis as frustrating and slow. If you can do it more efficiently, you can outpace teams that don’t do it as well.

03. Ad hoc analysis is what actually drives decision-making. If you can do it better, you can have real business impact and, at the same time, demonstrate that impact more clearly.

Why ad hoc analysis?

“Why ad hoc analysis?” feels like an obvious place to start this guide, but it’s really not a question any data team has had to face. The truth is that every organization does ad hoc analysis – inevitably and without exception. Your coworkers will ask you questions, and most of the time, the answer will require a quick ad hoc exploration.

So instead of answering “Why ad hoc analysis,” let’s instead explain why organizations neglect ad hoc analysis and why data teams are reluctant to give it the attention it deserves.

Why is demonstrating ROI still so hard?

In the quest to be data-driven, companies are collecting and storing more data and spending more money than ever on data tools and infrastructure. Data teams are growing in parallel with these tooling needs, but often the results of these investments lag, if they show up at all.

The reality is that many companies still struggle to demonstrate the impact and value of all that data. They struggle to measure ROI both quantitatively (“Can we point to clear examples of data projects that moved the needle?”) and qualitatively (“Do we feel data-driven?”).

If you ask data teams why they have trouble showing clear impact, the problem almost always boils down to one thing: Too many important decisions are still being made without data – even though many more people claim to care about backing their decisions with data.

For years, the solution has been to treat this as a pipeline problem. Data teams built dashboard after dashboard to help monitor metrics they thought were important. Data scientists finessed predictive data models that could help stakeholders make decisions informed by precedent.

But real decision-making almost always requires deeper insights on faster timelines than either dashboards (shallow) or predictive models (slow) can support. This means ad hoc analysis! But for most data teams, ad hoc analysis becomes a chore that feels inefficient and messy. They feel frustrated by requests that take them away from their “real work,” and, in the end, often take too long to do the ad hoc explorations that would provide real benefits.

In orgs that aren’t able to do fast, efficient ad hoc analysis, people make decisions without data. They’re unable to find a quick answer in their BI tool and unwilling to wait many cycles for the data team to dig into their question, so they just go with their gut. Over time as more and more decisions are made without data, people stop asking as many questions, and the ROI of the data team becomes very difficult to show.

Why do organizations and data teams neglect ad hoc analytics?

A natural question emerges: If ad hoc analysis is so important, why do so many organizations and teams neglect it!?

To answer this, let’s pull back and think through the three main types of data projects companies tend to work on. These are broad categories with big generalizations, but they’re directionally accurate.

Dashboards

Dashboards answer, “What happened?” Dashboards, static tools, and self-serve reports let people keep track of important business metrics over time. They allow people to keep an eye on key indicators as they change and, potentially, shift their decisions to align with new data.

Data science

Data science (usually) answers, “What’s going to happen next?” or “What should we do?” At most companies, the bulk of data science work focuses on building predictive models that allow leaders to forecast important activities and metrics.

Ad hoc analysis

Ad hoc analysis answers, “Why did that happen?” Unlike the other two categories, ad hoc analyses dive deep into specific questions to uncover deeper insights and figure out what’s really going on.

Many people assume that being data-driven comes down to asking and answering the first two questions. What happened? What’s going to happen next? It makes logical sense. The third question lags behind because of some quirk of the human psyche, and without systematization, ad hoc work inevitably becomes marginal to dashboards and data science.

Everyone knows that some ad hoc work has to happen, and this ironically ensures that ad hoc work is put to the side. Worse, if you actually ask data teams about ad hoc analysis, they tend to support this pattern by acknowledging the presence of ad hoc work while diminishing its potential.

“Yeah, we do a lot of ad hoc work across Google Sheets, scattered .csv files, Jupyter notebooks, SQL runners, and text documents,” they might say. “But it’s just a messy, complicated, slow process. That’s just how it is.”

We hear this all the time. As requests come in, data teams can feel like restaurant servers, running back and forth from the table to the kitchen – but imagine the path between the two is some kind of labyrinth with monsters and booby traps. It’s no wonder, then, that data teams don’t enjoy doing ad hoc work, and don’t invest in it.

The central problem is that data teams and companies alike tend to assume ad hoc work is messy and tiresome by nature. The messiness makes it unattractive, so no one invests in it, which ensures it remains messy.

But as we established above, lack of rigor in ad hoc analysis is more detrimental than most teams realize. These teams struggle to answer deep, complex questions about their business in a timely, impactful manner, and struggle to demonstrate their ROI.

OK, enough preaching. Let’s dive into the specifics. Some of this can feel basic, but it’s important to rebuild our understanding of ad hoc analysis from the ground up.

What is ad hoc analysis, really?

Ad hoc analysis is the art of investigating specific one-off questions and answering them with data. To be good at ad hoc analysis is to have the ability to analyze your company’s data to find the answer to a single, immediate question.

Here’s a simple example:

Allie runs marketing. One day, she notices conversion rates for web traffic skyrocketing. Her first question: “Why is conversion suddenly so amazing?!”

She asks the data team, and they look into it. Soon after, they discover major volume increases from a specific ad keyword that had always done well on conversion, but never had much search volume. Allie shifts ad spend toward that keyword and adjacent, trending keywords and improves cost-per-acquisition by 150% for the month.

This is the general pattern of ad hoc analyses:

01. Something has happened and stakeholders intuit the importance of the surrounding events.

02. Suddenly, stakeholders have to know the answer to very specific questions they may have never been asked before.

03. The success of the answer then depends as much on the data team’s speed in answering it as on the quality of the answer itself.

In the short term, people like Allie achieve these kinds of small but important wins. In the long-term, the company as a whole stacks small wins into big victories and, over time, learns to trust the data team and see the ROI of thinking with data.

Ad hoc analysis is usually about “why”

Dashboards tell you what happened, and data science, broadly, tells you what will happen next. How many widgets did we sell last week? Did we sell more than the week before? How many widgets will we sell next week?

Ad hoc analyses generally tell you why something happened. And deeply understanding why something happened is often much more valuable than looking at the history of an event or attempting to predict its future.

Take our scenario: A dashboard told Allie what happened (conversion went up), and ad hoc analysis told her what really happened (a specific keyword spiked unexpectedly in volume and lifted overall conversion). That “really” is where the rubber meets the road. The other questions can provide context, but “why” is the question that really matters.
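To make the “why” concrete, here is a minimal sketch of how a data team might break a blended conversion rate down by keyword. Everything in it is an assumption made for illustration: the `KeywordStats` type, the helper functions, and the traffic numbers are all invented, not taken from Allie’s real data. The point is only that a keyword can keep the same conversion rate and still lift the overall number when its share of traffic grows.

```python
from dataclasses import dataclass


@dataclass
class KeywordStats:
    keyword: str
    visits: int
    conversions: int


def overall_rate(rows):
    """Blended conversion rate across all keywords."""
    visits = sum(r.visits for r in rows)
    return sum(r.conversions for r in rows) / visits if visits else 0.0


def share_and_rate(rows):
    """Map each keyword to (share of total visits, its own conversion rate)."""
    total = sum(r.visits for r in rows)
    return {
        r.keyword: (r.visits / total, r.conversions / r.visits)
        for r in rows
        if r.visits
    }


# Hypothetical numbers, invented purely for illustration.
last_week = [
    KeywordStats("brand", 8000, 160),       # steady, high-volume keyword
    KeywordStats("niche term", 200, 20),    # converts well, tiny volume
]
this_week = [
    KeywordStats("brand", 8000, 160),       # unchanged
    KeywordStats("niche term", 2000, 200),  # same rate, ten times the volume
]

before = share_and_rate(last_week)
after = share_and_rate(this_week)

print(f"overall: {overall_rate(last_week):.2%} -> {overall_rate(this_week):.2%}")
for kw in after:
    share_b, rate_b = before[kw]
    share_a, rate_a = after[kw]
    print(f"{kw}: share {share_b:.1%} -> {share_a:.1%}, rate {rate_b:.1%} -> {rate_a:.1%}")
```

In this made-up data, no keyword converts any better than it did the week before. The blended rate rises only because the mix of traffic shifted, which is exactly the kind of finding a top-line dashboard can’t show on its own.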

Efficient exploration is key

Ad hoc investigations often emerge from hunches, observations, and instincts. Sometimes, you go down the rabbit hole and discover an amazing new truth about your business — and sometimes you don’t discover anything at all.

It would be totally plausible for our conversion scenario, for example, to have uncovered a non-actionable result. Maybe there’s nothing specific, and conversion just happens to have increased across the board. Maybe it turns out the data team doesn’t even have the data to answer this question. The failure rate, however, doesn’t mean ad hoc analysis isn’t worthwhile; it means that learning to timebox, work efficiently, and quickly validate hypotheses is crucial.

Ad hoc excellence is cultural

As data teams practice ad hoc analysis, they get better at investigating, and others learn to trust and communicate their data hunches more often. If you look back at our example, you can see three places where data worked as a cultural value.

First, Allie noticed the conversion anomaly in her high-level static reports. A default Google Analytics dashboard could have been plenty to make this noticeable.

Second, Allie asked a question and, even more importantly, asked why. Some teams might have just reported on the win – imagine “Conversion is up huge this week!” posted to every Slack channel – and then been silently disappointed when it dropped the following week.

Third, the data team responded by listening to Allie’s question, understanding that the impact of the answer depended on speed, and providing an answer in a timely fashion and an actionable format.

Of course, having the right tools is essential (we’ll cover tooling later), but no tool can help you choose the right questions. The most important changes that will help your team drive more business value with ad hoc analysis result from culture and process.

When ad hoc analysis becomes fun and efficient, your data team will never lack for opportunities to drive obvious business impact. Your challenge will be keeping up and prioritizing!

For a long time, data teams felt they couldn’t do all the analysis work they wanted to do because they were mired in plumbing work. But over the past decade, data teams have rebuilt their tooling (so much so that it’s now often called the “modern data stack” to differentiate from what came before). Now, data teams have pipelines, observability, infinitely scalable data warehouses, and more.

Many data teams, however, still feel that something is missing: They’re just parsing data instead of generating insights, or sending screenshots instead of sharing analysis. Information is emerging – sometimes in a trickle, sometimes in a geyser – but much of it is going to waste.

Ad hoc excellence is the missing piece.

In the following sections, we’ll take a high-level view and zoom in further and further on the work of ad hoc analysis.

We’ll start with the lifecycle of a great ad hoc analysis and zoom into the systems and frameworks that provide ad hoc work with structure, dig into the tools and workflows that make ad hoc analysis possible, and land on describing how and when to make ad hoc work evergreen.

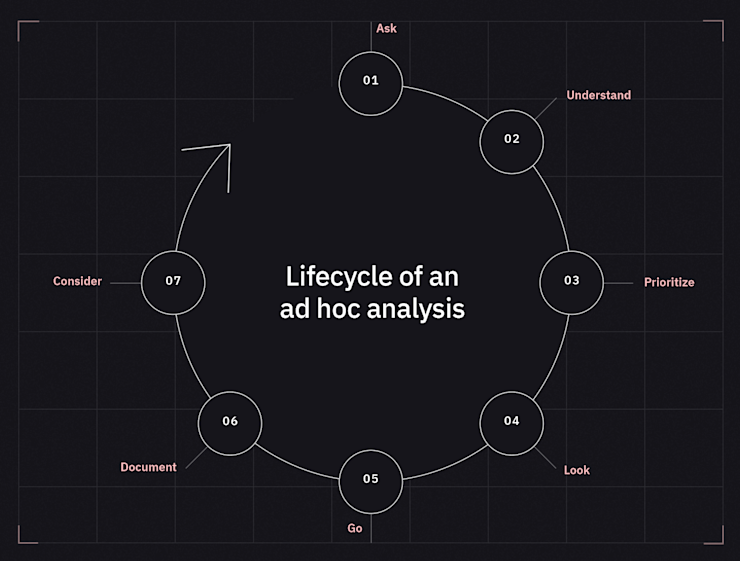

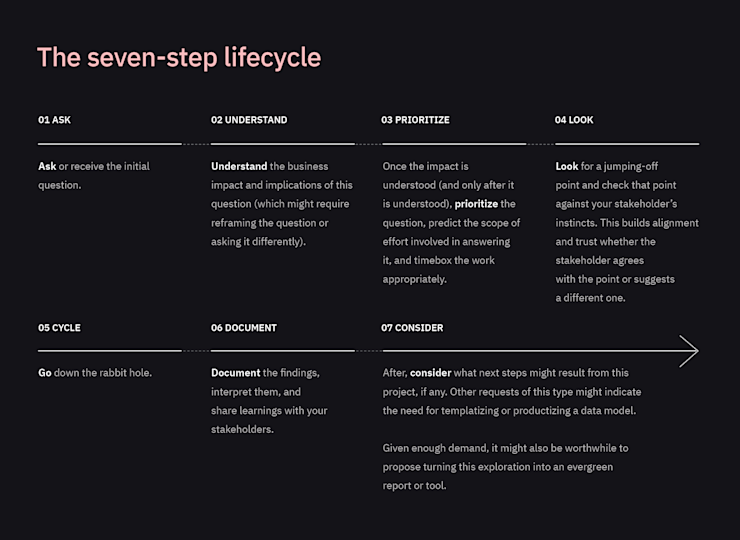

The lifecycle of ad hoc analysis is simple and unassuming, but once you couple it with the systems and frameworks that follow, the overall methodology becomes a powerful way of keeping even the most complicated exploration organized and efficient.

This lifecycle works as a to-do list in two senses: When you do ad hoc analysis, these are the steps you’ll follow, but also, when you examine the effectiveness of your ad hoc analysis processes, this list is how you’ll see which components most need improvement.

None of this is groundbreaking, but the results of this high-level perspective very well can be. With a clear blueprint for the lifecycle of an ad hoc analysis, you can eventually take a project from random Excel hackery to a polished, impactful report.

The lifecycle of a great ad hoc analysis

Of course, even though each step is important, they don’t require equal amounts of work. The bulk of ad hoc work lies in going down the rabbit hole (step 5), which is inherently difficult for data teams to predict and even more difficult, if not impossible, for outsiders to advise on.

The centrality of this work, however, makes thinking clearly and carefully about the bookends on either side of this work even more important. It doesn’t actually take much time to reframe a question or contextualize it in terms of expected business impact. It also doesn’t take too much time to present your findings neatly or translate your scratch work into a more compelling format.

But in both cases, these relatively small efforts can pay long-term dividends and save you from having to do duplicative work later on. As with a sprinter, the bulk of the work might be in running the race, but the success of the sprint can suffer badly from the wrong shoes or the wrong training.

Maturing your lifecycle

We’ve worked with hundreds of data teams, and their ad hoc lifecycle is often vaguer than what we’ve outlined, if they have one defined at all.

For many data teams, the initial question flows from a stakeholder to a person they happen to know in the data team or, a little better, a centralized email address or Slack channel. Some orgs that are a little more organized use a task management tool (Linear, Jira, etc.) here. The data team skips step two (Understand) either by trusting the stakeholder too much and taking their question literally, or trusting them too little and not looking for the insight contained in an imperfect question.

A key failure mode at this point is to prioritize based on limited contextual information and dive into analysis without ensuring alignment. It’s so important to align the work you’re about to do with the stakeholder’s request, and make sure it actually meets their underlying need.

But many teams are bogged down in tons of data tickets and move too slowly, so even though they obtain valuable insights, their usefulness has passed its expiration date. They might tell Allie, for example, that the spike in conversions she observed came from trending keywords, but by the time they do, those keywords might no longer be trending.

A lack of talent or passion isn’t the cause of these flawed lifecycles. The problem is upstream: Frustrating processes discourage investment, and diminished resources ensure systems never improve. But once you build a better lifecycle, you can shorten cycle time from days to hours – a change that makes supporting the effort a no-brainer.
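If you want to know whether your lifecycle is actually getting faster, it helps to measure cycle time directly. The sketch below is one minimal way to do that, assuming you can export a log with a timestamp for when each question was asked and when it was answered. The request IDs, timestamps, and the `cycle_hours` helper are all hypothetical.

```python
from datetime import datetime
from statistics import median

# Hypothetical request log: (request id, asked at, answered at).
requests = [
    ("REQ-1", "2024-04-01 09:00", "2024-04-03 17:00"),
    ("REQ-2", "2024-04-02 10:30", "2024-04-02 15:30"),
    ("REQ-3", "2024-04-04 08:00", "2024-04-09 08:00"),
]

FMT = "%Y-%m-%d %H:%M"


def cycle_hours(asked, answered):
    """Hours between a question being asked and its answer being delivered."""
    delta = datetime.strptime(answered, FMT) - datetime.strptime(asked, FMT)
    return delta.total_seconds() / 3600


hours = [cycle_hours(asked, answered) for _, asked, answered in requests]
median_hours = median(hours)

print(f"median cycle time: {median_hours:.1f} hours")
```

The median is used here rather than the mean so that one long-running investigation doesn’t hide how quickly typical requests are answered. That is a design choice for this sketch, not a rule.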

Systems and frameworks for ad hoc excellence

Once you understand the lifecycle at a high level – including the steps that need more investment and a sense of how it all flows together – you can start building the frameworks and concepts that will support the lifecycle as it runs.

The better these get, and the more you master them, the more efficiently you’ll be able to move through that lifecycle and invest the learnings of each run back into the team as a whole.

Build a culture of data curiosity

Our example of Allie the marketer and her mystery spike in conversion rates illustrates how data curiosity kickstarts the ad hoc analytics flywheel. The data team facilitates and encourages this curiosity, and the results of their investigation – shared and communicated well – flow back to Allie and keep the flywheel spinning.

Data teams want the companies they work in – from practitioners like Allie to executive leaders – to care about data. But caring about data is a double-edged sword, and it can go wrong in two different ways:

People only care about data in the abstract. If people aren’t curious about data and don’t see it as a real driver of their decisions, the flywheel won’t launch, and the data team will be stuck making dashboards and begging people to use them.

People reduce everything to data. Sometimes, especially given the trendy nature of being “data-driven,” people can assume all information is reducible to data. When this happens, data teams shift from serving the company to becoming a bottleneck on decisions and instincts.

We emphasize data curiosity as a way to feed positive feedback loops around ad hoc analytics and as a way to reduce the risk of slipping into either failure mode. Companies shouldn’t avoid asking questions that require referring to data, nor should they assume data can answer anything and everything.

To encourage data curiosity instead of data apathy or dependence, focus on three values:

Visibility

Interaction

Motivation

To increase visibility, make data present and easily understandable in as many places as possible. Dashboards are important here, but they’re not everything. Include other data artifacts, from simple, static graphs to more complex models. Embedding interactive artifacts into the places where people already do their work (Notion, Confluence, or other internal portals) is a great way to do this.

To increase interaction, meet people where they are via the tools they work with. Build a well-organized knowledge library that you can link to and create Slack channels where people can ask questions and see others ask questions and get answers. Above all, build a data request flow for the data team that feels good for everyone.

To increase motivation, take a nurturing perspective to the data-curious culture you’re trying to build. No individual question matters more than the rate of questions being asked. Who is asking the most questions? Why? Who isn’t asking questions? Why not?

Your goal is a big-picture one, and you’ll serve that goal better by encouraging broad curiosity rather than fine-tuning the questions. The best way to encourage this curiosity is to mulch the results back into the garden you’re growing — share success stories, highlight good ideas, and thank everyone for any attempt at contributing.
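One lightweight way to keep an eye on the rate of questions is to count them. The sketch below assumes you can export a simple log of who asked what and when, for example from a request channel or ticket queue. The team names, week labels, and the `all_teams` roster are hypothetical.

```python
from collections import Counter

# Hypothetical log of data questions: (ISO week, team that asked).
questions = [
    ("2024-W14", "marketing"),
    ("2024-W14", "marketing"),
    ("2024-W14", "sales"),
    ("2024-W15", "marketing"),
    ("2024-W15", "support"),
]

# Hypothetical roster of every team that could be asking questions.
all_teams = {"marketing", "sales", "support", "finance"}

by_team = Counter(team for _, team in questions)
by_week = Counter(week for week, _ in questions)

# Teams with no questions at all are the ones to check in with.
silent_teams = sorted(all_teams - set(by_team))

print("questions per team:", by_team.most_common())
print("questions per week:", sorted(by_week.items()))
print("teams not asking:", silent_teams)
```

The counts themselves are not the goal. They are a prompt for the follow-up questions above: why one team asks so often, and why another doesn’t ask at all.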

At the risk of distracting you with another buzzword, this culture of data curiosity is what “democratizing data” really is. It’s not about building some self-serve valhalla. It’s about making everyone curious about data, then offering them a mechanism for getting answers – and ensuring they benefit from their curiosity.

Maintain tight feedback and iteration loops with stakeholders

For successful ad hoc analysis, feedback loops need to be tight, fast, and accessible. Data teams have to be able to take feedback and quickly turn it around into better processes or better results. The ultimate result, beyond the initial findings being more accurate or aligned, is confidence-building among stakeholders.

Of course, the strategy here can’t be turning the data team into a service org that runs back and forth from Slack to SQL, blindly producing answers. Instead, data teams have to build a feedback loop that:

Validates initial findings.

Aligns stakeholders on research directions.

Investigates while sharing preliminary findings.

Presents findings in a clear, compelling fashion.

When you get an ad hoc request, balance between trusting the instincts of the domain expert and validating the initial findings. Though everyone is looking and hoping for big results, stakeholders will appreciate when you can quickly disconfirm a hunch, too.

If, for example, Allie’s data team could quickly show that the spike in conversions she saw was due to a problem in her analytics setup, then she has the freedom and confidence to pursue her initial ad spend plans without getting distracted by this mystery.

The meat of the investigation takes place next. But it’s crucial to confirm and re-confirm alignment before diving into the depths of an analysis. The first steps of a complex analysis can be super time consuming: finding new or obscure data sources, refreshing your memory on a schema and its relations, or just running big, slow queries. Without alignment, there’s a huge risk of wasting lots of time, and even a great investigation might not result in the kind of business momentum that supports the feedback loop you’re aiming for.

Three efforts build and maintain alignment:

Before the investigation, align on the research direction. By sharing this and collaborating on it, you’re making the stakeholders feel involved in the work and excited about the results – even if early feedback is minimal.

During the investigation, check in regularly and present preliminary findings as they emerge. Often, stakeholders might not be able to provide much feedback until they start to see the work. Ideally, you’re able to bring stakeholders into the tool you’re using and show them live work without having to download or screenshot things.

As the investigation returns results, keep stakeholders in the loop and make it feel collaborative. In ad hoc analysis, the work is impossible without either a hunch from a domain expert or the research work that investigates the hunch. Resist slipping into a “show and tell” workflow.

Alignment isn’t just something you do at the beginning. This is a cool thing to stop and ponder! Without this deep and frequent alignment throughout the life of a project, data teams can end up taking a question from a stakeholder, turning it into a ticket, stuffing it into a queue, and only presenting results on their timeline and on their terms. The answer, by then, is often little more than a resolved ticket.

At best, the results are useful but the stakeholder feels disengaged and less likely to act on them. At worst, time is wasted because alignment wasn’t maintained and the stakeholder might feel dissuaded from asking next time.

Ultimately, the feedback loop can spin in either direction: building momentum, so more people feel engaged and more work is done, or deepening disinterest, so few people ask and ad hoc analysis faces even more deprioritization.

Crossing the Sharing Gap

Ad hoc analysis is high-impact low-hanging fruit, but even within ad hoc work, there is a lower branch just waiting to be plucked: sharing your work in an effective way. We call this the sharing gap, and many teams only think they’re crossing it.

Already, data teams are doing a ton of work and producing a ton of results – especially given the power of the modern data stack. Unfortunately, a lot of this work goes to waste for a few reasons:

Results languish in static, unreproducible screenshots.

Discoveries are spread thin across tools that don’t cross-pollinate.

Feedback loops are opened but never closed.

Complex and meaningful insights never make it out of technical tools into stakeholders’ hands.

The trouble with this situation is that the solution, at a high level, sounds simple: Collaborate more and share more. OK, done! But the reality is more difficult and more nuanced; crossing the sharing gap requires more than a running start and a leap.

To see why, let’s return briefly to the fundamental goal of a data team. All too often, it’s easy to get lost in the weeds, to confuse process with result, and feel satisfied simply producing data. But we’re not in the business of producing or even analyzing data. Our job is to build knowledge.

Your data and your analysis could be world class, and you could have spent three weeks on it, but if it doesn’t influence a stakeholder or a business decision, it’s ultimately meaningless. Consider the negative case, too: If you don’t communicate your findings well enough, bad decisions can be made on bad interpretations of good data. No one wants this, but ensuring this doesn’t happen requires data teams to be partners and not service orgs.

Crossing the sharing gap isn’t just a step you need to take, but the leg of a table that won’t stand up without it. If your current stack and the workflows around it don’t empower your team to create, save, and share the artifacts of knowledge you’re producing, then it’s long past time to take a hard look at those tools and processes.

But before you cross, some advice and a word of warning: Better tools, on a technical level, can sometimes mean one step forward and two steps back. We’ve seen, for example, a data team replace a brittle forecasting spreadsheet with a Python notebook and then seen that team struggle; improving their own technical workflow wound up making collaboration with non-technical stakeholders more difficult.

Every query ran faster in this case, but data analysts had to run all the queries themselves because the stakeholders couldn’t use the notebook. Previously, they were able to tweak and explore themselves in the spreadsheet. To make things worse, the only meaningful “access” the team could offer was exporting the results of a fresh query to a PDF. This is how you wind up with an infestation of “notebook-run-20240430-final (2).pdf” files in your Downloads folder – very difficult to eradicate!

This example demonstrates part of what makes crossing the sharing gap so difficult. On one end of the gap, you’re working with brittle (but sticky!) spreadsheets. They’re not technically advanced and certain tasks might be difficult, but everyone knows how to work a spreadsheet. Even with good intentions, unless you consider all angles you might fall into the gap by building a better but less usable environment.

Usability for everyone involved is a critical requirement for all projects, but especially for ad hoc analysis tools. If a new system doesn’t enable at least as much sharing and collaboration across technical and non-technical people, then it’s fundamentally worse than the previous version.

The ability to share and collaborate on work is not marginal to investigative work – it’s fundamental. As you re-prioritize it, think through the nuances:

Are you crossing the publishing gap between an analyst and the stakeholders they share results with?

Are you crossing the collaboration gap between data analysts trying to work together?

Are you crossing the discovery gap that keeps anyone in the company from stumbling on a potential lead that could inspire real insight?

The sharing gap is frustrating, and when you consider how thorny it can be, you might revisit your feelings about ad hoc analysis in general. When you and your team feel that angst, when they worry that ad hoc analysis inherently means fragmented tools and slow back-and-forth work, how much of these feelings are really the result of the sharing gap? When you can cross the sharing gap, a big chunk of the ad hoc analysis lifecycle tends to fall into place too.

Tools and workflows

So far, we’ve emphasized philosophies, systems, and frameworks, but this doesn’t mean tools and workflows play a small role. Tools and workflows alone won’t turn you into an ad hoc analysis master, but picking the right tool and designing the right processes can make or break an otherwise well-thought-out plan.

Moving beyond dashboards

As we mentioned previously, many data teams have gotten into a cycle of creating dashboard after dashboard, even for small questions. Many of these sit unused, even by the people who originally requested them. Dashboards don’t need to be killed (google “Dashboards are dead” for an endless list of blogs calling for or predicting their demise), but data teams do need to return them to their rightful role as one tool among many.

Simple BI tools, in particular, are effective but limited in use. They’re great at answering simple historical questions, but they’re not designed for deep ad hoc analysis. Using even the best BI tool for this kind of exploratory analysis is like taking a Ferrari off-roading. It might feel luxurious for a short distance, but you’ll quickly end up stuck.

Notebooks also play an important role, but can’t support ad hoc analysis alone. As we covered in a previous example, the technical capabilities of a notebook-based workflow will be severely limited if there aren’t ways to share and collaborate within the data team and across to stakeholders.

Hex (you’re reading our guide!) combines the best BI features with the best parts of notebooks to give you one collaborative workspace for every data project. It makes it easy to go from a quick question, to a deep-dive analysis, to an interactive app anyone can use. Hex is the fastest, most powerful way to work with data, and the best tool for ad hoc analysis.

Workflows determine tools, not the other way around

As you discover the limitations of your current tools, we don’t advise just going tool shopping. Instead, return to the systems and frameworks we’ve described here, the lessons you’ve learned in your own experience, and think through the workflows you want to build. Your target workflow will always be a better leader than a tool that makes lofty promises about what you can do with it.

To build that workflow, though, don’t hold yourself to perfectionism. Set realistic goals, work toward them, and iterate. The holy grail of wholly self-serve analytics – if it’s even possible – is not arriving tomorrow.

Instead, iterate: Experiment, find gaps, identify what you need to fill them, and make progress. The more you iterate, the more you can tighten your feedback loops and fine-tune your systems.

As your workflow points to potential tooling, focus on thoughtful adoption. There’s been an explosion of data tools in recent years and it’s hard to figure out which tools will empower or extend your team and which will be another monthly bill and little else. Trial things, go beyond demos, and get as many teammates as you can into the tools during your trial.

By nature, most tools in the modern data stack will not be set-it-and-forget-it SaaS products. You need to invest financial resources and ongoing effort to make them a meaningful part of your work:

Onboard your team to the new tool.

Provide them with documentation, guides, and ways to ask questions.

Give them time and space to get comfortable with the new tool.

Teach and share best practices, and adapt those practices to your team’s needs.

Previously, we’ve written about how data teams can struggle with Looker and pointed out how the problems can lie just as much with the team dynamics as the tool. It’s easy to misuse persistent derived tables and neglect strict modeling practices, eventually ending up with model sprawl.

Always examine your processes alongside a tool. Even if you want to migrate to something different, you’ll make a more informed decision if you know the source of your struggles.

Similarly, with ad hoc work, we’ve seen the best results come from teams that focus first and foremost on building and standardizing ad hoc processes. If you design and build templates to reinforce standardization, regularly remind stakeholders to keep in-progress conversations going, and store ongoing work in a central place, your chance of success is much higher.

Throughout all this, remember that you can be scrappy without being sloppy. If you’re oriented toward building a holistic strategy and methodology, even the smallest increments and iterations along the way add up to progress.

Productization pathways for ad hoc work

Throughout, we’ve emphasized the value of ad hoc work as ad hoc work. In Allie’s example, we saw how a relatively short turnaround between question and answer delivered outsized results. But when ad hoc analyses succeed, there’s a fork in the road: Was that all the potential this question had, or is there a bigger unlock?

Sometimes, short-order ad hoc work can lead towards something of production-grade quality. That sounds grandiose, but this can be as simple as turning a static ad hoc outcome into an interactive tool by adding a single dropdown filter.
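As a rough illustration of that "single dropdown" step, here is a minimal Python sketch. It assumes the ad hoc result was a one-off revenue-by-plan breakdown; the sample rows, field names, and the function names `region_options` and `revenue_by_plan` are all hypothetical and are not taken from any particular tool. The point is only that the one-off calculation becomes a function with one parameter, which is the value a dropdown would supply.

```python
from collections import defaultdict

# Hypothetical sample rows standing in for the result of an ad hoc query.
ORDERS = [
    {"region": "EMEA", "plan": "free", "revenue": 0},
    {"region": "EMEA", "plan": "pro", "revenue": 120},
    {"region": "AMER", "plan": "pro", "revenue": 200},
    {"region": "AMER", "plan": "pro", "revenue": 80},
    {"region": "APAC", "plan": "team", "revenue": 300},
]


def region_options(rows):
    """Return the choices a dropdown would offer, with 'All' as the default."""
    return ["All"] + sorted({row["region"] for row in rows})


def revenue_by_plan(rows, region="All"):
    """Total revenue per plan, optionally filtered to a single region.

    The static ad hoc version corresponds to region="All"; the interactive
    version simply passes in whatever the stakeholder picked.
    """
    totals = defaultdict(int)
    for row in rows:
        if region == "All" or row["region"] == region:
            totals[row["plan"]] += row["revenue"]
    return dict(totals)


if __name__ == "__main__":
    for choice in region_options(ORDERS):
        print(choice, revenue_by_plan(ORDERS, choice))
```

In a real workspace, the `region` argument would be wired to an input widget rather than a loop, but the shape of the change is the same: one parameter separates a static answer from a reusable one.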

The transformation from ad hoc to production can take a few forms:

Refactor messy code from an ad hoc project into efficient, clean code that lives in your data model and is exposed in official tables.

Turn a document-style report into a dashboard (if the metric really requires regular monitoring). Let no one say we think dashboards are dead.

Create an interactive self-serve-on-rails tool for stakeholders, so they can slice and dice the data you captured in a variety of ways and access new results regularly.

These efforts pay dividends. You have the results of the initial ad hoc analysis, which, as we’ve said, are already more beneficial than they’re often given credit for, but you also have the ongoing benefits of a more evergreen tool.

We have a very broad product, and we serve seven different product teams. We could not do this analysis for every single product team, so it was really important to operationalize this initial analysis that we did and turn it into an interactive app so stakeholders can self-serve their own analysis. And that’s exactly what we did.

In this way, we circle back around to dashboards and “proactive” systemic work. It’s always been valuable work, but it’s often most effective when it emerges from ad hoc analysis that’s already established demand and proven its usefulness.

Conclusion

Ad hoc analysis is a fact of life for all data teams, and it almost universally inspires dread when it should really inspire excitement. The data world has evolved by leaps and bounds in recent years, but the business impact of data will stall if data teams get mired in creating dashboards and treat data as an end in itself rather than a means of producing knowledge. Ad hoc analysis delivers ROI, perhaps better than any other type of project, but it only works as well as you make it work.

It requires investment (time, resources, and effort), but the investment pays off deeply and broadly. The dividends are deep because the insights earned from these investigations will shape business decisions better than any other kind of project, and the dividends are broad because ad hoc analysis will push the entire company, over time, to care more about data and to become curious, every day, about what data can do.

The keys to success are simple, even if the work is nuanced:

Build systems and frameworks that support and encourage ad hoc analysis.

Focus on building and reinforcing feedback loops that encourage iteration and collaboration.

Adopt tools and workflows that support your work rather than bending tools against their original purposes.

If you remember nothing else, take this one thing away: If you embrace ad hoc analysis, the nature of the work itself will change and improve. It’s good for the business, yes, but it’s good for your team, too.

At Hex, we’ve spent years working on a platform that puts queries, notebooks, reports, interactive apps, and cutting-edge AI into one collaborative workspace. We believe it’s the best tool for any data analysis project, especially deep ad hoc explorations. Still, a rising tide lifts all boats: the more ad hoc analysis done, the better, whether you adopt Hex or not. But hey, if you’ve read far enough to buy into this whole ad hoc analytics thing, then it might be worth checking out a tool built by the people who believe in all this.

If this is interesting, click below to get started, or to check out opportunities to join our team.