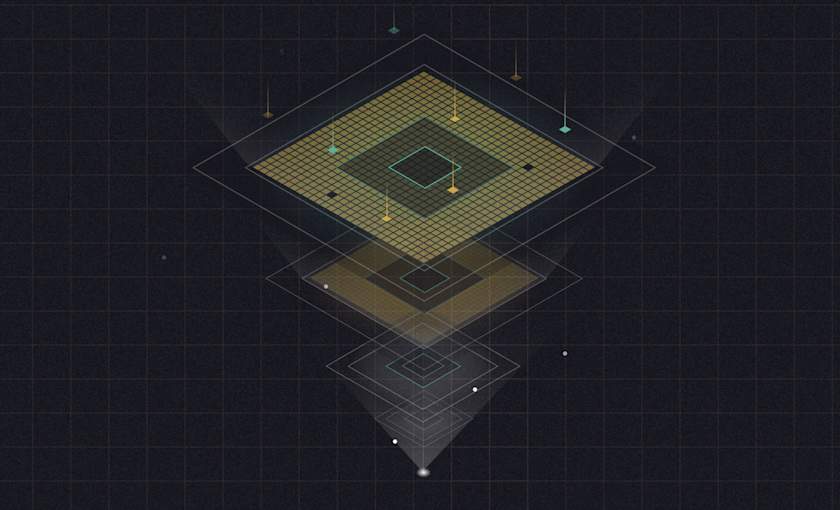

One of the magical things about Hex is its "multi-modality": the ability to stitch together various ways of working with data in one platform. Our compute architecture makes this possible by expressing every cell type as an interoperable "lazy dataframe" that can be executed on different backends: our DuckDB-powered in-memory engine, your data warehouse (via pushdown), or the Python kernel. This flexibility means you aren't constrained by the Python kernel's memory when you're working in SQL or no-code cells.

But many data science and ML workflows do require more kernel compute. Model training, for instance, can involve massive training datasets and often benefits from distributing training jobs across multiple cores to speed iteration cycles. Our original 8GB RAM, single-CPU compute profile isn’t always up to the task.

New power for your most complex workflows

That’s why we’re introducing Advanced Compute Profiles in Hex. Team and Enterprise customers now have access to more firepower, up to “4XL” (16 CPUs, 128GB RAM) and “V100 GPU” (1 V100 GPU with 16GB GPU RAM, 6 CPUs, 56GB CPU RAM).

Together with our warehouse, S3, and Google Drive integrations, advanced compute gives you everything you need to take on model development (or any compute-intensive workflow) from the comfort of your Hex notebook. There's no need to export datasets with millions of rows to another tool or wrestle with reconfiguring environments.

Advanced Compute Profiles in Hex come with a new “idle timeout” setting, which controls how long notebook kernels stay alive after a project run has completed. If you’re already pickling your Python state or writing it to CSVs and want to minimize compute costs, you can reduce the timeout to as little as 15 minutes. If you need a longer grace period, you can extend it up to 24 hours.
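If you plan to use a short idle timeout, it helps to write important state to disk before the kernel shuts down. Below is a minimal sketch, using only Python's standard library, of pickling arbitrary objects and writing tabular rows to CSV. The `saved_state` directory and the `save_state`/`load_state` helpers are hypothetical illustrations, not part of Hex, and whether files survive a kernel restart depends on where your project is allowed to write them.

```python
import csv
import pickle
from pathlib import Path

# Hypothetical directory for persisted state (an assumption, not a Hex default).
STATE_DIR = Path("saved_state")


def save_state(objects: dict, rows: list[dict], name: str = "checkpoint") -> None:
    """Pickle arbitrary Python objects and write tabular rows to a CSV file."""
    STATE_DIR.mkdir(parents=True, exist_ok=True)
    # pickle preserves Python types (lists, dicts, fitted objects, etc.).
    with open(STATE_DIR / f"{name}.pkl", "wb") as f:
        pickle.dump(objects, f)
    # CSV is portable but stores every value as text.
    if rows:
        with open(STATE_DIR / f"{name}.csv", "w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=list(rows[0].keys()))
            writer.writeheader()
            writer.writerows(rows)


def load_state(name: str = "checkpoint") -> tuple[dict, list[dict]]:
    """Reload the objects and rows written by save_state, e.g. in a fresh kernel."""
    with open(STATE_DIR / f"{name}.pkl", "rb") as f:
        objects = pickle.load(f)
    rows: list[dict] = []
    csv_path = STATE_DIR / f"{name}.csv"
    if csv_path.exists():
        with open(csv_path, newline="") as f:
            rows = list(csv.DictReader(f))
    return objects, rows
```

For example, call `save_state({"weights": [0.1, 0.2]}, [{"epoch": 1, "loss": 0.5}])` before the kernel idles out, then `load_state()` after it restarts. CSV round-trips every value as a string, so numeric columns need converting back; pickle preserves types but should only be loaded from files you trust.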

This is just one of many options for powering compute-intensive workflows in Hex. Snowpark ML and Modelbit, for instance, are two great solutions that we’ll continue to support, among others. You can also mix and match: for example, use Hex advanced compute out of the box to quickly prototype and test different models, features, and training datasets, then push the end result to another system to run inference workloads.

Only pay for what you use

Advanced compute is charged on a simple, pay-per-minute model. Need the firepower? Increase the compute profile size and get to work. Done with the heavy stuff? Scale down or stop your kernel, and your bill scales down or stops too!

Of course, we also built new Admin controls for predictable spend and observability. Admins can set per-billing-cycle spend limits, view detailed usage logs, and restrict advanced compute access to specific user groups (for example, your data science team). For more details on all of this, check out our docs.

Bring it all together in Hex

Our new compute options help you minimize tool sprawl and streamline your advanced data science and ML workflows. They're easy to set up, make collaboration effortless, and speed up iteration on resource-intensive projects.

Admins can enable advanced compute for their workspace in Settings > Compute.

Advanced compute profiles are only available on Team and Enterprise plans.

If this is interesting, click below to get started or to check out opportunities to join our team.