
Why data teams (almost) never have to answer the same customer question twice

How you can break free from repetitive customer questions

Every data team knows the pattern. It starts innocently enough on Monday morning.

"Hey, can you pull Customer X's usage data? Their renewal is coming up and usage looks down."

By Monday afternoon: "Actually, can you break that down by feature?"

Tuesday morning: "Wait, can you also compare them to similar customers in their cohort?"

Wednesday: "What about their support tickets? Maybe there's a correlation?"

Thursday: "One more thing — can you segment by user role?"

Each iteration means another query, another context switch, another delay to your strategic projects. Your analysts become human API endpoints, fielding an endless stream of evolving questions that morph with each answer.

This back-and-forth investigation pattern consumes enormous amounts of time. And here's the catch: even after all that effort, stakeholders often discover patterns the data team might have missed. That isn't because analysts lack skill. It's because the people closest to the business bring context that changes how they interpret the data.

The limitations of traditional Customer 360 dashboards

The traditional answer has been Customer 360 dashboards — comprehensive views that consolidate customer metrics, usage patterns, support history, and billing data into a single pane of glass. Pack every conceivable metric into a BI dashboard and call it done. But these static views fail for a fundamental reason: investigation isn't linear — your questions branch out based on what you discover.

You're left with two problematic options:

Give everyone SQL access (chaos ensues)

Keep fielding requests (burnout ensues)

Leading data teams have pioneered a third way: design for question evolution from the start with data apps. Ramp built one. We did too.

Building data apps for customer success questions

To build for question evolution, start by covering the most common customer questions the data team receives. By mapping the patterns in those questions and building interactive data apps around them, you can give stakeholders the power to investigate while maintaining governance.

1. Start by bringing in as much data related to customers as possible

Pull as much “360” data as possible into your project, including billing, product, CRM, and support data. With all of the data in one place, iterating on the front end is quick.
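As a rough illustration of what "one place" can look like, here is a minimal sketch that joins four sources into a single per-customer view using Python's built-in `sqlite3` module. Every table and column name (`crm_accounts`, `billing`, `product_events`, `support_tickets`, `customer_360`) is a hypothetical placeholder, and the sample rows are invented for the demo. Your warehouse and schema will differ.

```python
import sqlite3

# All table and column names below are hypothetical placeholders.
SCHEMA = """
CREATE TABLE crm_accounts (customer_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE billing (customer_id INTEGER, mrr REAL);
CREATE TABLE product_events (customer_id INTEGER, feature TEXT, event_date TEXT);
CREATE TABLE support_tickets (customer_id INTEGER, status TEXT);
"""

# Each source is aggregated per customer before joining, so that joining
# events and tickets together does not multiply rows.
CUSTOMER_360_VIEW = """
CREATE VIEW customer_360 AS
SELECT
    c.customer_id,
    c.name,
    b.mrr,
    COALESCE(u.events, 0) AS usage_events,
    COALESCE(t.open_tickets, 0) AS open_tickets
FROM crm_accounts AS c
LEFT JOIN billing AS b ON b.customer_id = c.customer_id
LEFT JOIN (
    SELECT customer_id, COUNT(*) AS events
    FROM product_events
    GROUP BY customer_id
) AS u ON u.customer_id = c.customer_id
LEFT JOIN (
    SELECT customer_id, COUNT(*) AS open_tickets
    FROM support_tickets
    WHERE status = 'open'
    GROUP BY customer_id
) AS t ON t.customer_id = c.customer_id;
"""


def build_demo_database() -> sqlite3.Connection:
    """Create an in-memory database with invented sample rows and the view."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO crm_accounts VALUES (?, ?)", [(1, "Acme"), (2, "Globex")]
    )
    conn.executemany(
        "INSERT INTO billing VALUES (?, ?)", [(1, 1200.0), (2, 300.0)]
    )
    conn.executemany(
        "INSERT INTO product_events VALUES (?, ?, ?)",
        [
            (1, "dashboards", "2024-05-01"),
            (1, "exports", "2024-05-02"),
            (2, "dashboards", "2024-05-03"),
        ],
    )
    conn.executemany(
        "INSERT INTO support_tickets VALUES (?, ?)", [(1, "open"), (1, "closed")]
    )
    conn.executescript(CUSTOMER_360_VIEW)
    return conn
```

Downstream analyses can then select from `customer_360` instead of re-joining the raw sources each time a question changes.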

2. Design your app with the most common questions that the data team gets

Start by answering the most common questions the team gets. You may also want to consider the anomalies that tend to trigger an investigation, such as:

Usage dropped 30% month-over-month

Support tickets spiked above normal levels

Feature adoption stalled after initial rollout

Revenue declined despite contract expansion

For each trigger pattern, you could build a focused view in the data app with that specific investigation in mind. When building a data app to help teams investigate, begin by prototyping in a notebook environment. Interview your customer success managers about their most common questions, shadow their investigation process, and identify the decision trees they follow. Then translate those patterns into an interactive experience.
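One way to prototype trigger patterns like these in a notebook is a small function that compares two monthly snapshots. This is only a sketch: the `MonthlySnapshot` fields, the trigger names, and the 50% ticket-spike threshold are assumptions made for illustration. Only the 30% usage drop comes from the examples above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class MonthlySnapshot:
    """Hypothetical per-customer monthly totals."""
    usage_events: int
    support_tickets: int


def pct_change(previous: float, current: float) -> float:
    """Relative change from previous to current (0.0 when previous is 0)."""
    if previous == 0:
        return 0.0
    return (current - previous) / previous


def detect_triggers(
    previous: MonthlySnapshot,
    current: MonthlySnapshot,
    usage_drop_threshold: float = 0.30,    # "usage dropped 30% month-over-month"
    ticket_spike_threshold: float = 0.50,  # assumed definition of a "spike"
) -> list[str]:
    """Return the names of the trigger patterns that fired this month."""
    triggers = []
    if pct_change(previous.usage_events, current.usage_events) <= -usage_drop_threshold:
        triggers.append("usage_drop")
    if pct_change(previous.support_tickets, current.support_tickets) >= ticket_spike_threshold:
        triggers.append("ticket_spike")
    return triggers
```

Each trigger name could then map to the focused view built for that investigation, so the app opens on the analysis most relevant to what changed.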

3. Enable guided exploration with guardrails

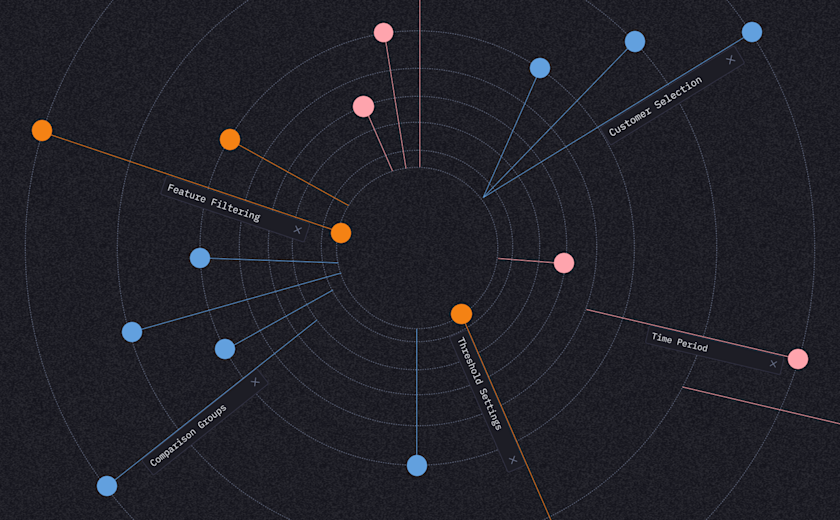

Modern data platforms allow you to create input parameters that automatically filter all downstream analysis. First, identify the key dimensions customer success needs to explore. Here are a few examples:

Customer selection: Which account to investigate

Time period: Date ranges for trend analysis

Feature filtering: Specific product areas to examine

Comparison groups: Peer accounts or cohorts

Threshold settings: What constitutes "abnormal"

Then implement these as interactive inputs that update all analyses automatically. The power comes from stakeholders refining their view without writing code. When inputs change, everything responds in sync: queries, analysis, and contextual insights. This transforms the investigation experience. Stakeholders aren't learning a new tool; they're following their investigative instincts within the guardrails you've provided.
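To make the idea concrete outside of any particular platform, the sketch below models the inputs as a small dataclass and passes them to a parameterized SQL query, so stakeholders change values rather than query text. It uses Python's built-in `sqlite3` module. The `InvestigationInputs` class, the `product_events` table, and the sample rows are all hypothetical, and this is not how any specific data platform implements its input parameters.

```python
from __future__ import annotations

import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InvestigationInputs:
    """Hypothetical inputs a stakeholder could change from the app."""
    customer_id: int
    start_date: str                # ISO date, inclusive
    end_date: str                  # ISO date, inclusive
    feature: Optional[str] = None  # None means "all features"


def usage_by_feature(conn: sqlite3.Connection, inputs: InvestigationInputs):
    """Count events per feature, filtered only through bound parameters."""
    sql = """
        SELECT feature, COUNT(*) AS events
        FROM product_events
        WHERE customer_id = :customer_id
          AND event_date BETWEEN :start_date AND :end_date
          AND (:feature IS NULL OR feature = :feature)
        GROUP BY feature
        ORDER BY feature
    """
    params = {
        "customer_id": inputs.customer_id,
        "start_date": inputs.start_date,
        "end_date": inputs.end_date,
        "feature": inputs.feature,
    }
    return conn.execute(sql, params).fetchall()


def demo_connection() -> sqlite3.Connection:
    """In-memory database with invented rows for trying the query."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE product_events (customer_id INTEGER, feature TEXT, event_date TEXT)"
    )
    conn.executemany(
        "INSERT INTO product_events VALUES (?, ?, ?)",
        [
            (1, "dashboards", "2024-05-01"),
            (1, "dashboards", "2024-05-10"),
            (1, "exports", "2024-05-02"),
            (1, "exports", "2024-06-15"),
            (2, "dashboards", "2024-05-03"),
        ],
    )
    return conn
```

Because the query text never changes, the data team controls what can be asked (the guardrails), while stakeholders control which customer, period, and feature they are asking about.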

4. Build in business context automatically

Once you've designed the app, it may be worth doing a bit of extra work to add more context. Stakeholder questions can stall because metrics lack context. Is a 30% usage drop seasonal, or does it signal churn risk?

Examples of context that you could layer directly into the app include:

Historical patterns: "This customer typically sees 20-40% drops in December."

Cohort comparisons: "Similar-sized customers average 15% month-over-month growth."

Correlation callouts: "3 support tickets filed the same week as usage decline."

Account context: "Customer is 60 days from renewal."

Account activity: "A training session happened last week, so there was a spike in usage."
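Callouts like these can be generated from data the app already has. The sketch below shows two of them as plain Python functions. The function names, arguments, and wording are assumptions for illustration, and the "typical range" is assumed to have been computed elsewhere from the customer's history.

```python
from datetime import date


def renewal_callout(today: date, renewal_date: date) -> str:
    """Account context: how far away the renewal is."""
    days = (renewal_date - today).days
    return f"Customer is {days} days from renewal."


def seasonal_callout(drop_pct: float, typical_low: float, typical_high: float) -> str:
    """Historical pattern: is this month's drop inside the usual range?"""
    if typical_low <= drop_pct <= typical_high:
        verdict = "within"
    else:
        verdict = "outside"
    return (
        f"A {drop_pct:.0f}% drop is {verdict} this customer's typical "
        f"{typical_low:.0f}-{typical_high:.0f}% range for this month."
    )
```

Rendering these sentences next to the relevant chart gives stakeholders the interpretation alongside the number, instead of leaving them to ask the data team whether a change is unusual.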

5. Make insights persistent and shareable

The worst outcome of any investigation is that no action is taken and no decision is made. Ultimately, the purpose of apps like these is to deepen your understanding of a problem so that you can do something about it. Modern data apps, like those built in Hex, address this with built-in collaboration:

Investigation memory features in Hex:

Subscribe to apps and set up notifications for when they run

Comment threads on specific findings

@mentions to loop in team members

Version history showing investigation evolution

Saved filter combinations for common scenarios

To capture investigation context:

Include note-taking capabilities within the app

Auto-generate investigation summaries

Create investigation templates for common patterns

Build team knowledge bases from past investigations

This creates organizational memory. The next time someone has a question about a customer, they'll see the previous findings and build on them rather than starting from scratch.

| Traditional BI Workflow | Data App Workflow |
|---|---|
| Analyst receives request | Customer success manager opens app |
| Writes custom SQL query | Selects customer from dropdown |
| Exports to spreadsheet | Views pre-built analysis with context |
| Manually adds comparisons | Adjusts filters to explore angles |
| Emails static screenshot | Shares interactive findings with team |
| "Can you also check..." email arrives | Investigates independently |
| Repeat 5x over 3 days 🔄 | Complete in single 20-minute session |

Implementation principles for your first app

Based on patterns across successful implementations:

Choose the right first use case

Pick a painful, repetitive investigation pattern

Start with 3-5 key questions CS asks weekly

Focus on one clear outcome (renewal risk, expansion opportunity, health score)

Design for exploration, not just viewing

Include 3-4 interactive filters maximum to avoid overwhelming users

Pre-build the most common investigation paths

Add "why might this happen?" context throughout

Involve customer success throughout

Shadow their current investigation process

Test prototypes with real scenarios

Iterate based on which features they actually use

Measure what matters

Time from question to answer

Number of follow-up requests

Actions taken based on findings

CS team satisfaction scores
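The first two measures are straightforward to compute if you log each request. Here is a minimal sketch, assuming a hypothetical `Request` record with the time a question was asked, the time it was answered, and the number of follow-ups it generated. How you capture that log is up to your own tooling.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime
from statistics import mean, median


@dataclass
class Request:
    """Hypothetical log entry for one stakeholder question."""
    asked_at: datetime
    answered_at: datetime
    follow_ups: int


def median_hours_to_answer(requests: list[Request]) -> float:
    """Median time from question to answer, in hours."""
    hours = [
        (r.answered_at - r.asked_at).total_seconds() / 3600 for r in requests
    ]
    return median(hours)


def mean_follow_ups(requests: list[Request]) -> float:
    """Average number of follow-up requests per question."""
    return mean(r.follow_ups for r in requests)
```

Tracking both numbers before and after launching the app gives you a simple baseline for whether it is actually reducing the back-and-forth.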

Start small: One pattern, one app, endless time saved

Once stakeholders experience the power of guided investigation, adoption spreads naturally. Customer success teams stop asking for reports and start finding answers. When that shift happens, data teams can finally focus on building tools that scale their impact rather than fielding an endless queue of one-off requests.

The transformation is gradual but profound: your data team evolves from service desk to platform builder, and your customer success team gains the autonomy to investigate with confidence. That's when both teams become truly strategic.

If this is interesting, click below to get started, or check out opportunities to join our team.