Hex is built to be an extremely powerful, flexible tool to build almost anything with data. We’ve architected around the “cell” paradigm first popularized in notebooks, but taken it to an extreme: we have dozens of cells that handle everything from querying data and writing code to building visualizations and configuring UI inputs.

People use these to build some incredible analyses and data apps. But it also means that Hex projects can have a lot going on, with hundreds of cells with rich UI and interactive visualization all working together. This adds up, and as the Hex product and our user base kept growing, we saw progressively more challenges in having a performant, delightful user experience.

Performance optimization in browsers

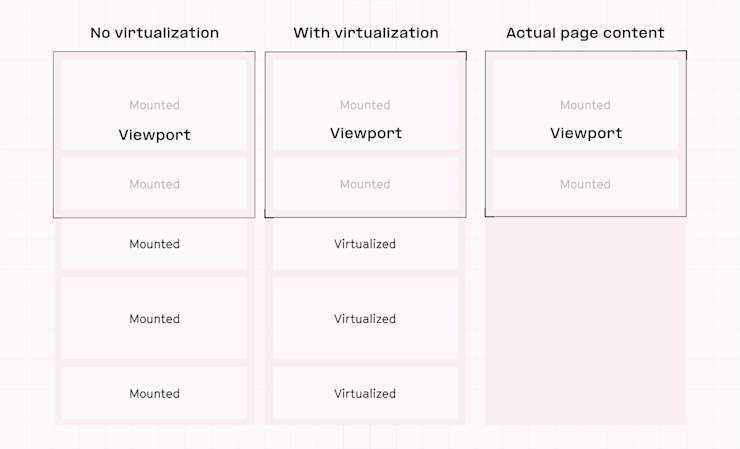

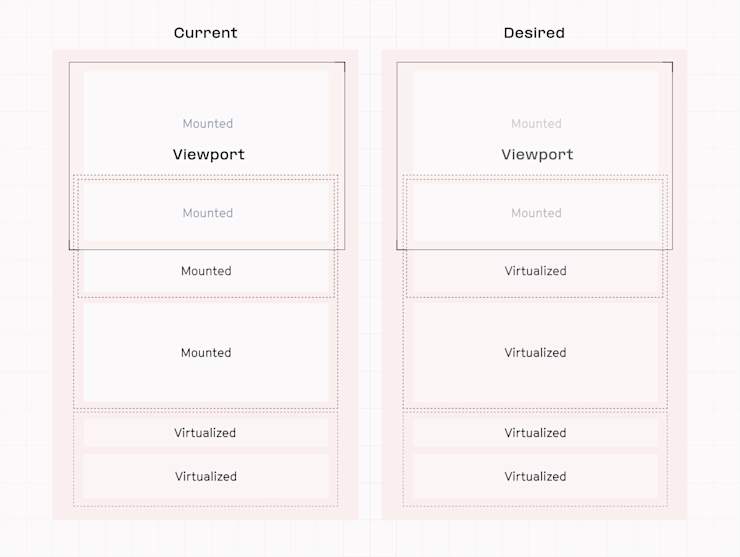

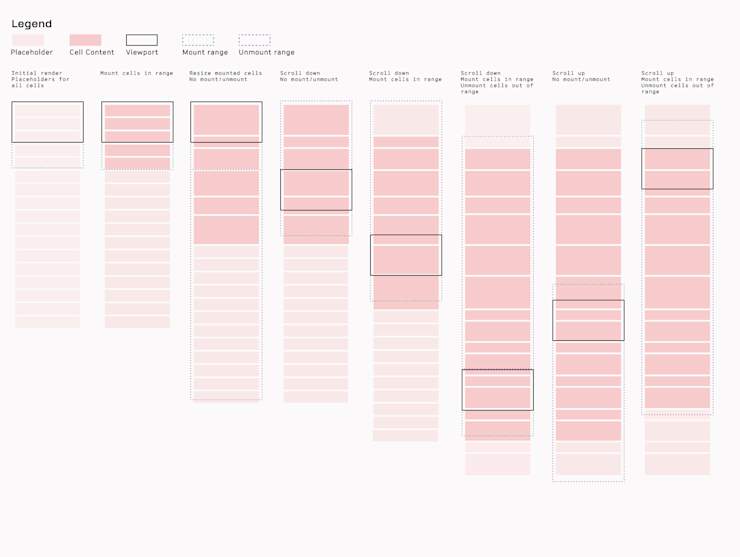

One obvious solution here is virtualization: render only what is visible in the viewport, and free the client’s resources for other work.

Early on, we turned to React Virtuoso for virtualization. It’s fantastic for efficiently rendering large lists — why not leverage it for cells? By rendering only visible elements, Virtuoso reduced rendering loads for our biggest notebooks and improved performance.
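To make the idea concrete, here is a minimal sketch of the windowing calculation behind list virtualization in general. It is not how Virtuoso is implemented; `visibleRange` and its parameters are hypothetical names chosen for illustration, and it assumes every item's height is already known.

```javascript
// Hypothetical helper: returns the first and last indices of list items
// that overlap the viewport. `heights` holds each item's pixel height
// in document order.
function visibleRange(heights, scrollTop, viewportHeight) {
  const viewportBottom = scrollTop + viewportHeight;
  let top = 0;
  let first = -1;
  let last = -1;
  for (let i = 0; i < heights.length; i++) {
    const bottom = top + heights[i];
    // An item is visible if it overlaps [scrollTop, viewportBottom).
    if (bottom > scrollTop && top < viewportBottom) {
      if (first === -1) first = i;
      last = i;
    }
    top = bottom;
  }
  return { first, last };
}

// Ten 300px cells, an 800px viewport scrolled down 1000px.
const range = visibleRange(Array(10).fill(300), 1000, 800);
console.log(range); // { first: 3, last: 5 }
```

Only items 3 through 5 would need to be rendered here; the other seven can be skipped until the user scrolls toward them.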

It worked well in some ways, but with caveats: scrolling was jittery, and reliably remedying it was a challenge. We used it for only our largest notebooks, where the benefits outweighed the quirks. Smaller notebooks performed fine without Virtuoso, but that was short-lived. We were forced to confront the problem.

Scrolling by a problem

We don’t blame Virtuoso for our scrolling woes. The many ways in which Hex cells were resized (by users, their collaborators, or kernel operations) probably weren’t doing us any favors. Virtuoso does support mixed-size content and resizing, so it was likely solvable. Unfortunately this wasn’t our only problem!

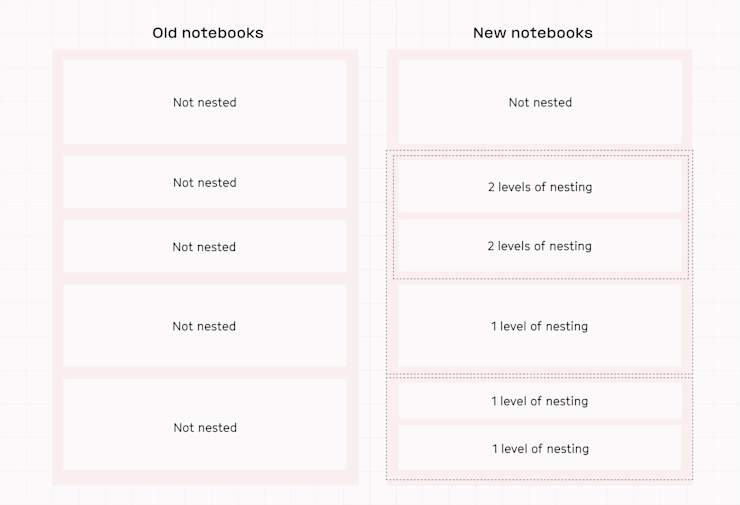

Virtuoso was designed for lists. Huge lists. Infinite lists. Modern Hex notebooks more closely resemble trees — hierarchical rather than linear — on the order of hundreds of cells at most and increasingly deeply nested.

Virtuoso supports some nesting — at the time of writing, one level deep. This wasn’t going to cut it. Users nested cells within sections inside components, sometimes within more sections. If the viewport happened to scoop up a container with deeply nested content, we might be forced to render all of it at once.
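A small sketch shows why shallow virtualization hurts with nested content. The notebook shape below is hypothetical (containers have `children`, cells do not) and is not Hex's actual data model; `countCells` is likewise an illustrative name.

```javascript
// Hypothetical notebook shape: containers have `children`, cells do not.
const notebook = [
  { id: "intro" },
  {
    id: "component",
    children: [
      { id: "section-a", children: [{ id: "a1" }, { id: "a2" }, { id: "a3" }] },
      { id: "section-b", children: [{ id: "b1" }, { id: "b2" }] },
    ],
  },
  { id: "summary" },
];

// Counts the leaf cells under a node; a container contributes all of its descendants.
function countCells(node) {
  if (!node.children) return 1;
  return node.children.reduce((sum, child) => sum + countCells(child), 0);
}

// If only top-level items can be virtualized, bringing "component" into view
// mounts every cell nested inside it, however far below the viewport they sit.
const mountedAtOnce = countCells(notebook[1]);
console.log(mountedAtOnce); // 5
```

The deeper and larger the container, the more cells get mounted in a single step, which is exactly the work virtualization was supposed to avoid.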

Virtuoso orchestrates virtualization from a specialized list container that controls the mounting behavior of its contents. Could we place control over mounting behavior in the hands of the cells themselves? Regardless of how deeply nested they were in the tree?

With 10,000 elements, delegating that decision to each one would be expensive. But our domain is notebooks with (at most) hundreds of cells, so we could take advantage of that and reap the benefits.

Reading the tea leaves: building our own virtualized element

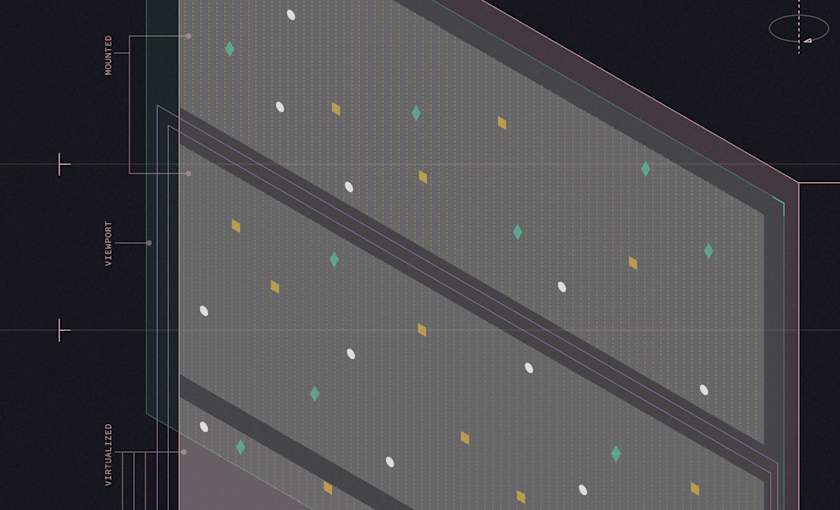

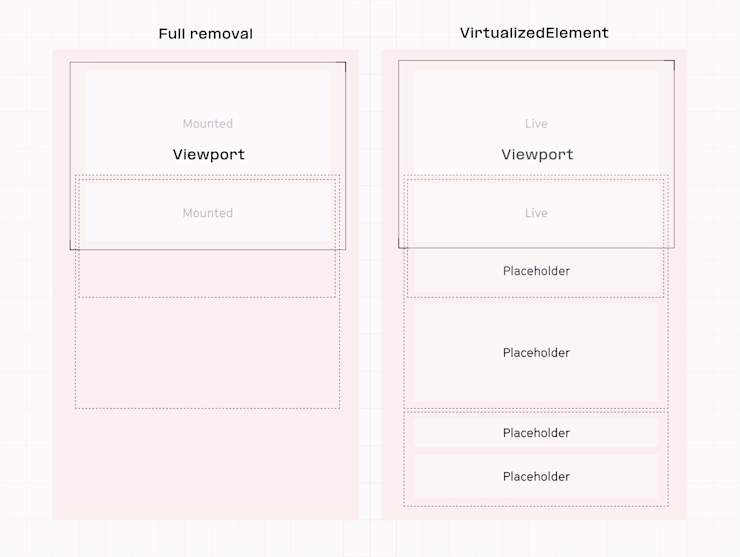

Rather than wield a virtualized container to mount and unmount elements from a list, we built a self-contained virtualized element. Any React component, but most importantly a cell, should be virtualized or rendered depending on its proximity to the viewport alone. Because each cell makes this decision independently, nesting isn’t an issue.

Virtualized cells are replaced with correctly-sized placeholders to retain a skeleton for the notebook to hydrate. If there are 100 cells, there should be 100 virtualized elements — each deciding whether it’s time to mount its contents. By making the size of the notebook more stable, we could offer a better scrolling experience. No magic to fake the height of the page — the elements are there!
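The stability argument can be sketched with a little arithmetic. The cell records and function names below are hypothetical, and the 200px fallback mirrors the default used in the simplified implementation in this post; the point is only that a placeholder reusing the last measured height leaves the total page height unchanged.

```javascript
// Fallback height for a cell that has never been measured (assumed value).
const DEFAULT_PLACEHOLDER_HEIGHT = 200;

// Hypothetical cell record: `measuredHeight` is the last height observed while mounted.
function renderedHeight(cell) {
  // A mounted cell takes its real height; a placeholder reuses the last measurement.
  if (cell.mounted) return cell.actualHeight;
  return cell.measuredHeight ?? DEFAULT_PLACEHOLDER_HEIGHT;
}

function notebookHeight(cells) {
  return cells.reduce((sum, cell) => sum + renderedHeight(cell), 0);
}

const mounted = [
  { mounted: true, actualHeight: 480, measuredHeight: 480 },
  { mounted: true, actualHeight: 120, measuredHeight: 120 },
];
// Virtualize the second cell: it becomes a placeholder with its measured height.
const virtualized = mounted.map((cell, i) => (i === 1 ? { ...cell, mounted: false } : cell));

console.log(notebookHeight(mounted) === notebookHeight(virtualized)); // true
```

Because the scrollable height does not change when a cell is swapped for its placeholder, the scrollbar position stays put.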

react-intersection-observer was already in use elsewhere in the codebase and became a building block for the implementation. Mounts needed to be conservative as they’re expensive. Once mounted, we could be liberal with our retention to minimize how often we have to re-mount as users go about their work. To accomplish this, we used two separate observers: mount and unmount.

Distinct observers allow us to independently control the schedule of mounting and unmounting. We trigger mounts for cells that are within 500 pixels of the viewport, and trigger unmounts (and placeholders) for cells that are more than 5,000 pixels from the viewport. The result is a 4,500-pixel window of cells with visible content, through which users can scroll without triggering any mounts or unmounts.
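The two thresholds amount to hysteresis: the answer to "should this cell be mounted?" depends on its current state as well as its distance. Here is a minimal sketch of that decision as pure functions, separate from any observer API. The function and constant names are hypothetical, and boundary handling is simplified compared to what an intersection observer actually reports.

```javascript
const MOUNT_MARGIN_PX = 500;    // from the post: mount within 500px of the viewport
const UNMOUNT_MARGIN_PX = 5000; // from the post: unmount beyond 5,000px

// Distance in pixels between an element and the viewport; 0 when they overlap.
function distanceFromViewport(elementTop, elementBottom, viewportTop, viewportBottom) {
  if (elementBottom < viewportTop) return viewportTop - elementBottom;
  if (elementTop > viewportBottom) return elementTop - viewportBottom;
  return 0;
}

// Returns whether content should be mounted, given whether it currently is.
function nextMounted(isMounted, distance) {
  if (!isMounted && distance <= MOUNT_MARGIN_PX) return true;  // close enough: mount
  if (isMounted && distance > UNMOUNT_MARGIN_PX) return false; // far enough: unmount
  return isMounted; // in between: keep the current state
}

console.log(nextMounted(false, 200));  // true
console.log(nextMounted(false, 3000)); // false
console.log(nextMounted(true, 3000));  // true
console.log(nextMounted(true, 6000));  // false
```

A cell 3,000 pixels away keeps whatever state it already has, which is what prevents mount and unmount churn as the user scrolls back and forth.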

Before hiding content, the intersection observer gives us the element’s actual height, which we then use for its placeholder. A simplified version of our implementation expresses all of the above fairly concisely:

    import { useContext, useState } from "react";
    import { useInView } from "react-intersection-observer";

    // VirtualizationContext and Placeholder are defined elsewhere in the codebase.
    export const VirtualizedElement = ({ children }) => {
      const [contentVisible, setContentVisible] = useState(false);
      const [elementHeight, setElementHeight] = useState();
      const { scrollRoot } = useContext(VirtualizationContext);

      // Mount once the element comes within 500px of the scroll root.
      const handleEnter = (inView, entry) => {
        if (inView) {
          setContentVisible(true);
          setElementHeight(entry.boundingClientRect.height);
        }
      };
      const { ref: enterRef } = useInView({
        root: scrollRoot,
        rootMargin: "500px 0px",
        onChange: handleEnter,
      });

      // Unmount once the element is more than 5,000px away, keeping its last height.
      const handleExit = (inView, entry) => {
        if (!inView) {
          setContentVisible(false);
          setElementHeight(entry.boundingClientRect.height);
        }
      };
      const { ref: exitRef } = useInView({
        root: scrollRoot,
        rootMargin: "5000px 0px",
        onChange: handleExit,
      });

      return (
        // Attach both observers to the same wrapper element.
        <div ref={(el) => { enterRef(el); exitRef(el); }}>
          {contentVisible ? children : <Placeholder height={elementHeight ?? 200} />}
        </div>
      );
    };

A simplified implementation of VirtualizedElement.

Raking in the benefits

Our custom-built VirtualizedElement offered a tidy, generalized solution designed for our domain — how much did it pay off? Front-end performance improved measurably across the board!

- 90% reduction in initial render time for the notebook
- 33% reduction in interaction lag (latency of user interactions)
- 25% reduction in page load (until network requests and DOM changes are complete)
- 14% reduction in render lag (long animation frames observed during scrolling or animations)
- 10% reduction in memory usage

Conclusion

Although not appropriate for every use case, VirtualizedElement is a great fit for Hex notebooks. Building it offered us direct performance wins as well as an opportunity for our team to deep-dive on our product needs. We’re much better prepared for the road ahead!

If this kind of problem solving interests you, let us know! We’re hiring.
