A quick guide to Feature Selection

Feature selection, an essential step in the machine learning (ML) pipeline, is the process of choosing the most relevant variables (features) in a dataset to use for model training. As part of data preprocessing, it turns raw datasets into more compact inputs that support accurate, reliable learning. Done well, feature selection makes models easier to interpret, less prone to overfitting, often more accurate, and cheaper to train.

Enhanced Model Interpretability

Including irrelevant features in an ML model can make it more complex, harder to interpret, and less reliable. Feature selection simplifies models by removing unimportant or redundant features, making them easier to understand. This is especially valuable in fields such as healthcare and finance, where predictions often must be explained to stakeholders or regulators. A model that is easier to explain also gives data scientists clearer insight into its behavior, which helps them fine-tune it more effectively.

Reduced Overfitting

Overfitting is a common problem in ML: a model learns the training data too closely, including its noise and outliers, and then generalizes poorly to unseen data. Irrelevant features make overfitting more likely because they add complexity, giving the model more opportunities to fit noise. Feature selection reduces this risk by lowering model complexity, which improves the model's ability to generalize to new data.
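
A minimal sketch with scikit-learn, assuming synthetic data from `make_classification` (5 informative columns among 200, and only 100 samples) and an arbitrary choice of `k=10`. It compares cross-validated accuracy with and without selection. Placing `SelectKBest` inside the pipeline matters: each fold then selects features from its own training split, so the score is not inflated by information leaking from the held-out fold. The size of any gap between the two printed scores depends on the data and seed.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

# Synthetic data: 5 informative features among 195 pure-noise columns,
# with only 100 samples, a setting where overfitting is easy.
X, y = make_classification(
    n_samples=100, n_features=200, n_informative=5, n_redundant=0,
    shuffle=False, random_state=0,
)

# Baseline: fit on all 200 features.
all_features = make_pipeline(LogisticRegression(max_iter=1000))

# Selection inside the pipeline: each CV fold picks its top 10 features
# using only that fold's training data.
selected = make_pipeline(
    SelectKBest(score_func=f_classif, k=10),
    LogisticRegression(max_iter=1000),
)

for name, model in [("all features", all_features), ("top-10 features", selected)]:
    scores = cross_val_score(model, X, y, cv=5)
    print(f"{name}: mean CV accuracy = {scores.mean():.3f}")
```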

Improved Accuracy

The accuracy of an ML model depends heavily on the quality of its input data. Irrelevant or redundant features add noise that can degrade model performance. Feature selection mitigates this by keeping only the features that contribute meaningfully to the output, which often improves accuracy and makes predictions more reliable and useful.

Reduced Computational Cost

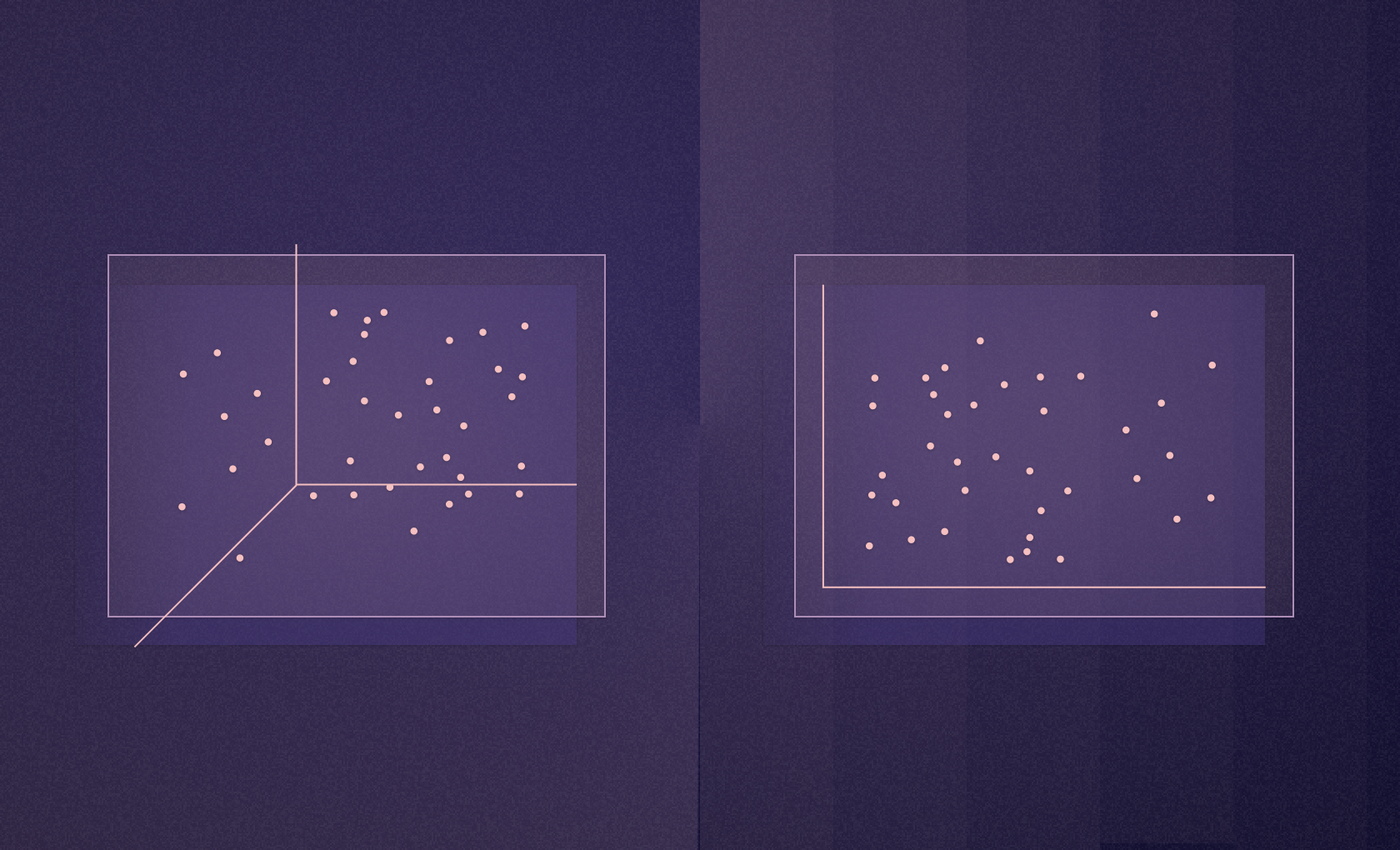

ML models can become prohibitively expensive in computation and memory when dealing with high-dimensional data, because each additional feature adds to training time and memory use. By identifying and retaining only the significant features, feature selection can substantially reduce the dimensionality of the dataset. This speeds up training and lowers memory requirements, making it feasible to train models on devices with limited computational resources.
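
A rough illustration, assuming NumPy and scikit-learn's `VarianceThreshold`, on a hypothetical float64 matrix in which 900 of 1,000 columns are constant. Because a dense array's memory grows linearly with its column count (rows x columns x 8 bytes here), dropping those columns cuts the footprint tenfold.

```python
import numpy as np
from sklearn.feature_selection import VarianceThreshold

rng = np.random.default_rng(0)

# Hypothetical dataset: 5,000 rows x 1,000 float64 columns,
# where 900 columns are constant and carry no information.
n_rows, n_useful, n_constant = 5_000, 100, 900
X = np.hstack([
    rng.normal(size=(n_rows, n_useful)),
    np.ones((n_rows, n_constant)),
])

# With the default threshold (0.0), only zero-variance columns are removed.
selector = VarianceThreshold()
X_reduced = selector.fit_transform(X)

print(X.shape, f"{X.nbytes / 1e6:.0f} MB")                  # (5000, 1000) 40 MB
print(X_reduced.shape, f"{X_reduced.nbytes / 1e6:.0f} MB")  # (5000, 100) 4 MB
```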

Better Handling of Multicollinearity

In datasets with many input features, it is common for some features to be highly correlated with one another, a condition known as multicollinearity. Especially in linear models, this makes coefficient estimates unstable and hard to interpret, and it can hurt performance. Feature selection techniques can identify and remove these redundant features so that each remaining feature adds unique information.
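
One simple way to find such redundant pairs is a pairwise correlation filter. The sketch below uses a hypothetical helper, `drop_correlated` (not a library function), and assumes pandas and an absolute-correlation cutoff of 0.9 chosen for illustration. It scans columns left to right and drops any column that is highly correlated with a column already kept. Variance inflation factors are a common alternative for the same job.

```python
import numpy as np
import pandas as pd


def drop_correlated(df: pd.DataFrame, threshold: float = 0.9) -> list[str]:
    """Hypothetical helper: return columns to drop so that no kept pair
    has an absolute Pearson correlation above `threshold`."""
    corr = df.corr().abs()
    kept: list[str] = []
    to_drop: list[str] = []
    for col in corr.columns:
        # Compare only against columns we have decided to keep.
        if any(corr.loc[col, k] > threshold for k in kept):
            to_drop.append(col)
        else:
            kept.append(col)
    return to_drop


rng = np.random.default_rng(42)
n = 500
height = rng.normal(170, 10, n)
df = pd.DataFrame({
    "height_cm": height,
    "height_in": height / 2.54 + rng.normal(0, 0.1, n),  # near-duplicate of height_cm
    "weight_kg": rng.normal(70, 8, n),                   # independent of height here
})

print(drop_correlated(df))  # ['height_in']
```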

Feature selection is a pivotal step in ML model development that helps enhance interpretability, reduce overfitting and computational costs, improve accuracy, and handle multicollinearity. Although it adds a step to the ML pipeline, its benefits usually outweigh that cost, leading to more reliable, efficient, and interpretable models.

FAQ

To perform feature selection, you can use statistical methods, machine learning algorithms, or a combination of both. Common techniques include filter methods (such as variance thresholding or chi-squared tests), wrapper methods (such as forward selection, backward elimination, or recursive feature elimination), and embedded methods (such as LASSO or Elastic Net regression, whose L1 penalty drives some coefficients to exactly zero). Ridge regression, by contrast, shrinks coefficients without eliminating any, so on its own it does not select features. The Python library scikit-learn (sklearn) offers a wide range of feature selection methods.
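
A minimal sketch of one filter, one wrapper, and one embedded method in scikit-learn, assuming the built-in breast cancer dataset and arbitrary settings (`k=5`, `n_features_to_select=5`, `C=0.1`). For a classification target, the LASSO-style L1 penalty is applied here to logistic regression rather than to linear regression. For brevity everything is fit on the full dataset; in practice, fit selectors on training data only.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, chi2
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

data = load_breast_cancer()
X, y, names = data.data, data.target, data.feature_names  # 569 samples, 30 features

# Filter method: score each feature independently with a chi-squared test.
# chi2 requires non-negative inputs, which holds for these raw measurements.
filter_sel = SelectKBest(score_func=chi2, k=5).fit(X, y)

# The model-based methods below compare coefficient sizes, so scale first.
X_scaled = StandardScaler().fit_transform(X)

# Wrapper method: recursive feature elimination refits the model repeatedly,
# dropping the feature with the smallest |coefficient| each round.
wrapper_sel = RFE(
    LogisticRegression(max_iter=1000), n_features_to_select=5
).fit(X_scaled, y)

# Embedded method: an L1 penalty drives some coefficients to exactly zero
# during training; SelectFromModel keeps features whose |coefficient| > 1e-5.
l1_model = LogisticRegression(penalty="l1", solver="liblinear", C=0.1)
embedded_sel = SelectFromModel(l1_model, threshold=1e-5).fit(X_scaled, y)

for label, sel in [("filter", filter_sel), ("wrapper", wrapper_sel), ("embedded", embedded_sel)]:
    print(f"{label}: {list(names[sel.get_support()])}")
```

For forward selection and backward elimination specifically, scikit-learn also provides `SequentialFeatureSelector`, with `direction="forward"` or `direction="backward"`.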

Deep learning approaches to feature selection often train a neural network on the data and use its learned weights (for example, those of the input layer) or its activations to estimate feature importance. Autoencoders are primarily a feature extraction tool, but they can also be adapted for selection. More recently, post-hoc interpretability methods such as permutation feature importance (which works with any fitted model, not only neural networks) and gradient-based saliency maps have been used to identify important features in deep learning models.
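
A minimal sketch of permutation importance on a small neural network, assuming scikit-learn's `MLPClassifier` as a lightweight stand-in for a deep learning model and synthetic data in which, by construction, only the first three columns carry signal. Importances are computed on a held-out split, so they reflect what the model uses to generalize rather than what it memorized.

```python
from sklearn.datasets import make_classification
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic data: columns 0-2 are informative, columns 3-9 are noise
# (shuffle=False keeps the informative columns first).
X, y = make_classification(
    n_samples=1000, n_features=10, n_informative=3, n_redundant=0,
    shuffle=False, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# A small neural network, with scaling as part of the model.
net = make_pipeline(
    StandardScaler(),
    MLPClassifier(hidden_layer_sizes=(32,), max_iter=2000, random_state=0),
).fit(X_train, y_train)

# Shuffle one column at a time on held-out data and record how much the
# accuracy drops; a large drop means the model relies on that feature.
result = permutation_importance(net, X_test, y_test, n_repeats=10, random_state=0)

for i in result.importances_mean.argsort()[::-1]:
    print(f"feature {i}: {result.importances_mean[i]:.3f} +/- {result.importances_std[i]:.3f}")
```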

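Whether feature selection should come before or after feature scaling depends on the selection method. Feature selection picks the most relevant features for your model, while feature scaling standardizes the range of input features, and some selection methods are sensitive to that range. Variance thresholding must run on unscaled data, because standardization gives every non-constant feature a variance of 1. Methods that compare coefficient sizes, such as LASSO-based selection, need scaled inputs so that a feature's units do not decide whether it survives. When the method allows it, selecting first also avoids the computational effort of scaling features you will discard. Either way, fit both steps on the training data only.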
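
The order depends on the method, and a sketch of both orderings makes the difference concrete. It assumes scikit-learn pipelines, synthetic data, and arbitrary settings (`threshold=0.01`, `alpha=0.1`). Wrapping the steps in a pipeline also means that, under cross-validation, the scaler and the selector are fit on training folds only.

```python
import numpy as np
from sklearn.feature_selection import SelectFromModel, VarianceThreshold
from sklearn.linear_model import Lasso, LinearRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 200
X = np.column_stack([
    rng.normal(0, 100, n),    # informative, large units
    rng.normal(0, 0.001, n),  # nearly constant
    rng.normal(0, 1, n),      # pure noise
])
y = 0.05 * X[:, 0] + rng.normal(0, 1, n)

# Variance thresholding BEFORE scaling: after standardization every
# non-constant column has variance 1, so the nearly constant column
# could no longer be told apart.
variance_first = make_pipeline(
    VarianceThreshold(threshold=0.01), StandardScaler(), LinearRegression()
).fit(X, y)

# L1-based selection AFTER scaling: the penalty acts on coefficient size,
# which would otherwise depend on each feature's units.
scale_first = make_pipeline(
    StandardScaler(), SelectFromModel(Lasso(alpha=0.1)), LinearRegression()
).fit(X, y)

print(variance_first[0].get_support())  # [ True False  True]
print(scale_first[1].get_support())     # informative column 0 is kept
```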

The advantages of feature selection include improved model interpretability, reduced overfitting, shorter training times, better model performance, and more effective handling of multicollinearity. It produces simpler models that are easier to understand and explain, cheaper to compute, and often better at prediction.

Feature extraction and feature selection are both techniques used in dimensionality reduction. Feature selection involves choosing the most relevant features from the original dataset, while feature extraction involves creating new features from the original data, typically by using a transformation or other algorithm. Both techniques aim to reduce the dimensionality of the dataset while retaining the important information for predictive modeling.

Feature selection is essential for removing irrelevant or redundant data from your dataset. It helps to simplify models, making them easier to understand and faster to train. By focusing on the important features, it enhances the model's predictive performance and robustness, making it less likely to overfit to the training data.

Feature selection is crucial in machine learning as it helps to reduce overfitting, enhance model interpretability, improve accuracy, and reduce training times. By identifying and using only the most relevant features for model training, it aids in building more robust and generalizable models while reducing computational complexity.
