Exploratory Data Analysis

Izzy Miller

Exploratory data analysis in Hex is like no other tool you've used before. SQL, Python, rich text, and a library of no-code tools are right at your fingertips in the same...

How to build: Exploratory Data Analysis

Exploratory data analysis (EDA) is a technique used by data professionals to understand the data, its structure, the relationship among features, and other relevant information. EDA can help you to identify outliers, missing values, anomalies, and unknown relationships in the dataset.

It is also incredibly laborious. It requires meticulous scrutiny of each variable and its interactions within the dataset. Often, the sheer volume and complexity of the data can be overwhelming, leading to significant time investment and technical challenges. Furthermore, EDA is not just about running statistical tests or plotting graphs; it involves a deep understanding of the data context, requiring critical thinking and domain expertise. This can make EDA a daunting task, especially for beginners or those working with unfamiliar or particularly intricate datasets.

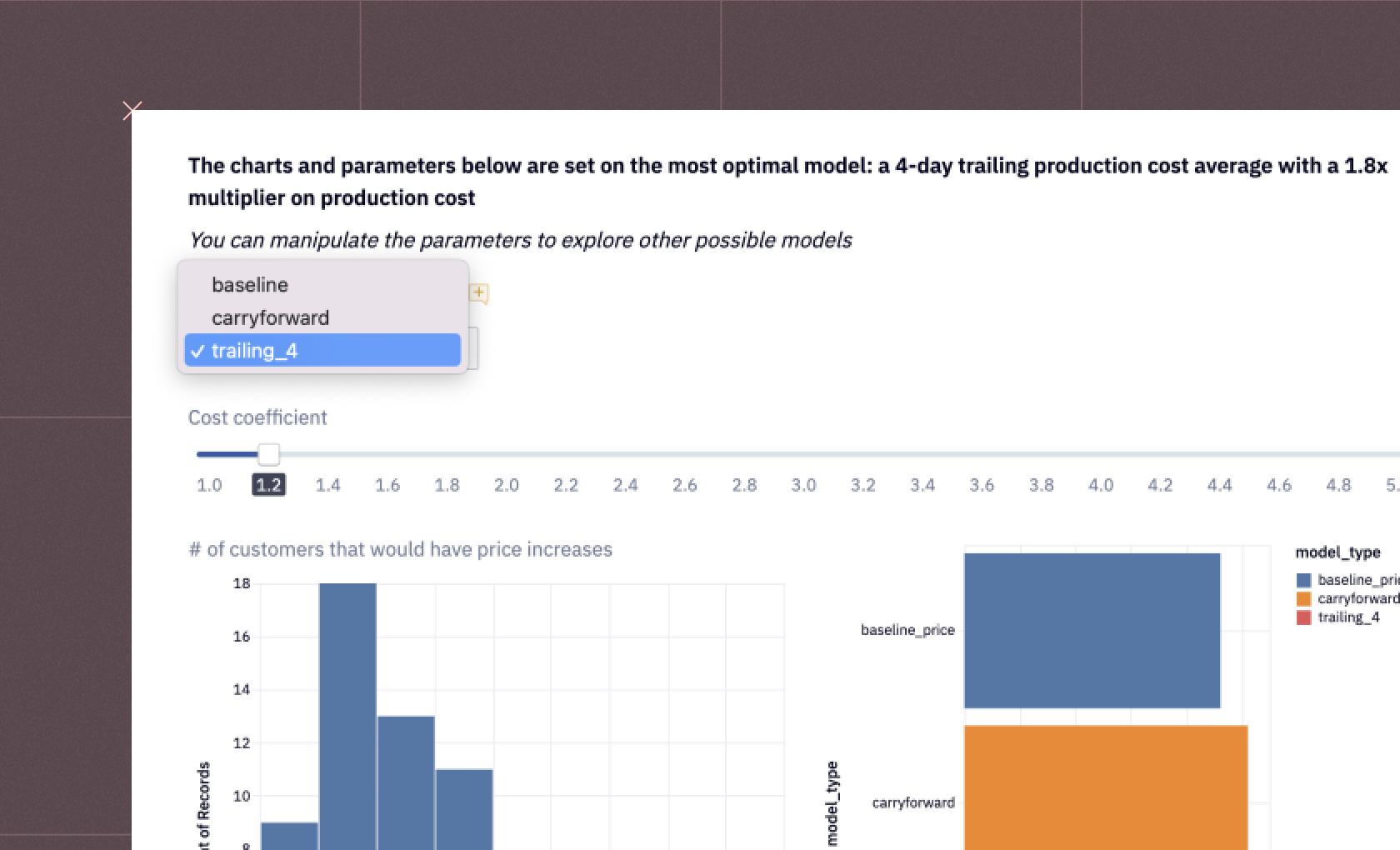

However, we can streamline the EDA process with the right tools and approaches, such as using Hex, making it more efficient and accessible. Here, we want to show you how Hex can simplify EDA, helping data professionals unlock valuable insights from their data with greater ease.

Importance of EDA

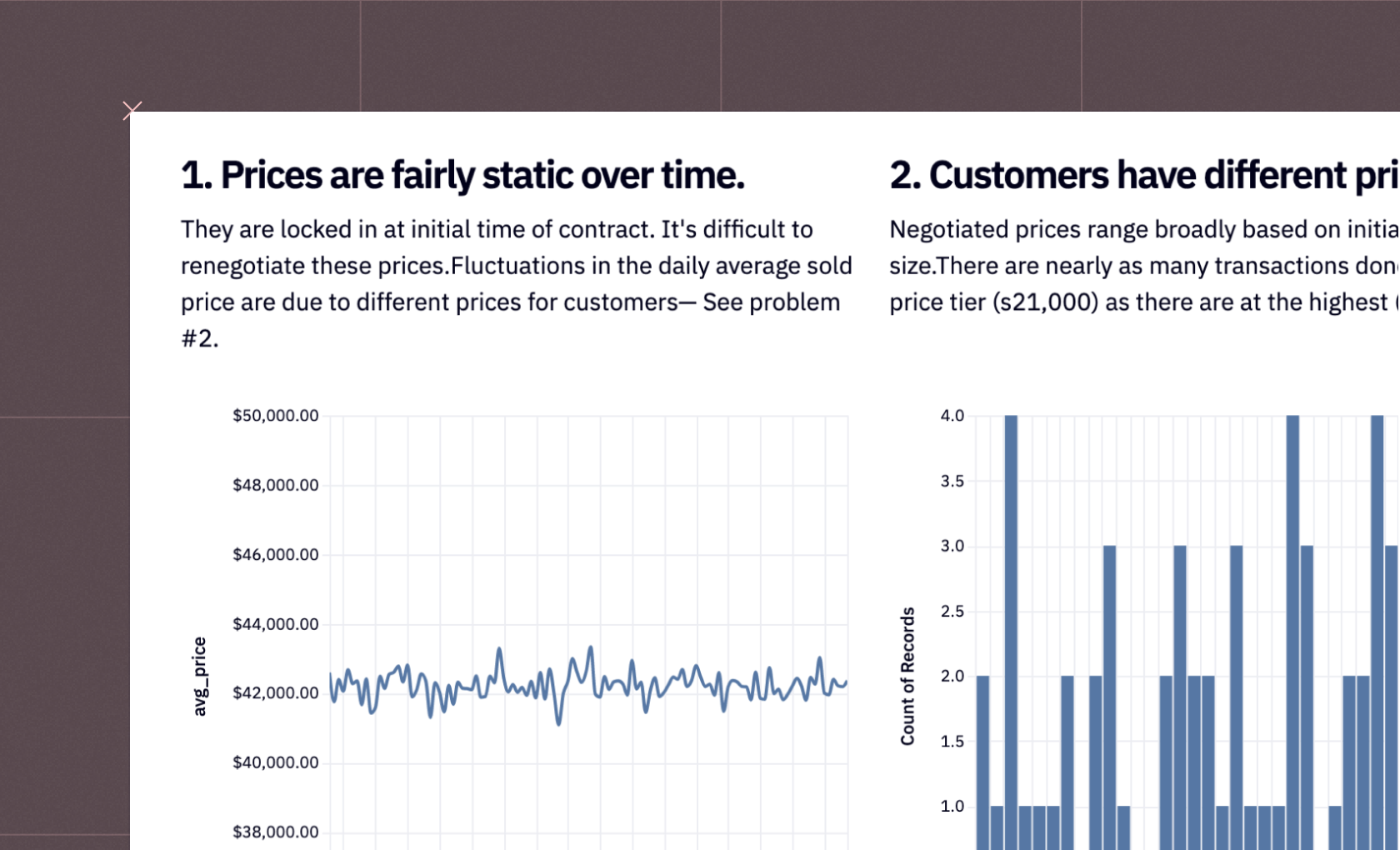

By visually representing the data through graphs and charts, EDA helps identify patterns, trends, and relationships between variables, extracting valuable insights that might go unnoticed in raw data. Moreover, EDA helps assess our assumptions and statistical models, ensuring the accuracy and reliability of the analyses.

One common misconception about EDA is that we just need to create many aesthetically pleasing visualizations. However, it is more about preparing the data and visualizations that can answer the most relevant questions, leading to effective data-driven decision-making.

In this article, you will see a practical implementation of EDA on the Food and Weather dataset using Python and Hex. This dataset contains information about the weather and famous food places in different cities. We will try to answer some of the most common questions that will help us make informed decisions.

Installing and Importing the Necessary Packages

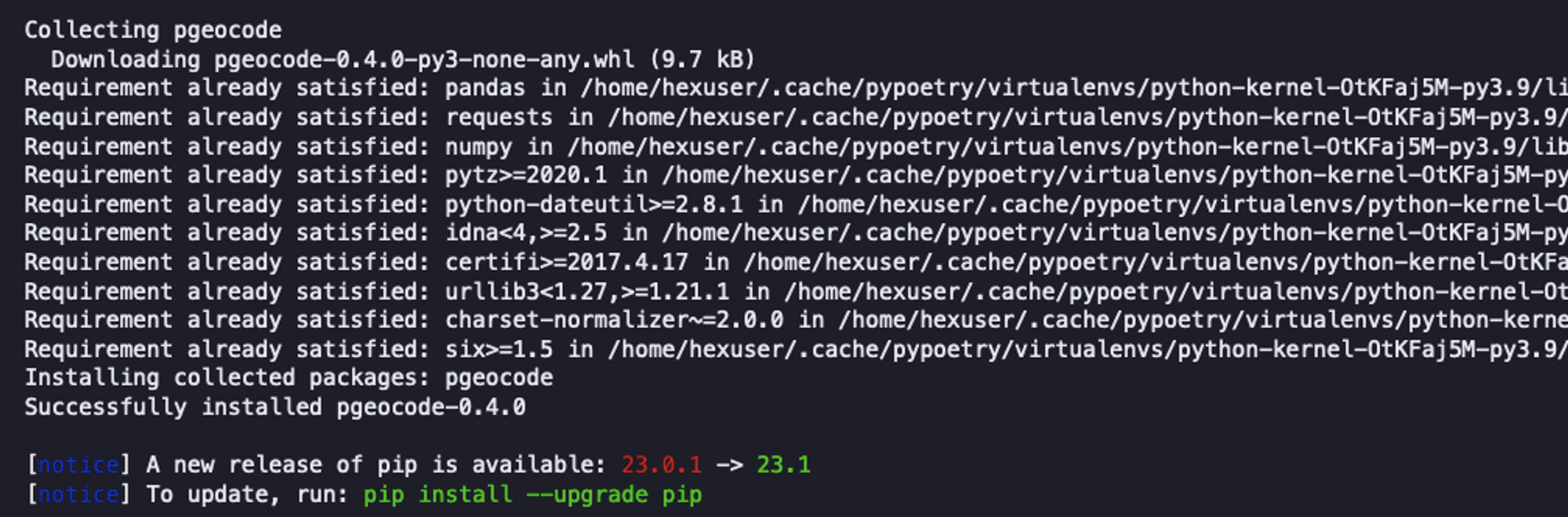

For this tutorial, we will be using Python 3.11 and Hex as a development platform. Hex already has a library of pre-installed packages, but to install a new package, you can use the Python Package Manager (PIP) as follows:

! pip install package_nameFor example, one of the important libraries that we will need is [pgeocode](<https://pypi.org/project/pgeocode/>), a library that contains information about postal codes and coordinates. You can install it as follows:

! pip install pgeocode

Once installed you can import the package to the hex environment as follows:

import seaborn as sns

import numpy as np

from scipy.stats import pearsonr

import warnings

import pgeocode

warnings.filterwarnings("ignore")We have imported the following set of dependencies:

Numpy: Package used for scientific computing in Python.

Scipy: It is a Python package that includes modules for statistics, linear algebra, signal and image processing, and more.

Seaborn: A famous Python library used for appealing visualization.

Pgeocode: A Python package that allows for extremely fast offline querying of GPS coordinates, and region names from postal codes.

Data Collection and Initial Exploration

Now that you have loaded all the dependencies, it’s time to load the data and do some initial exploration.

Sources of Data

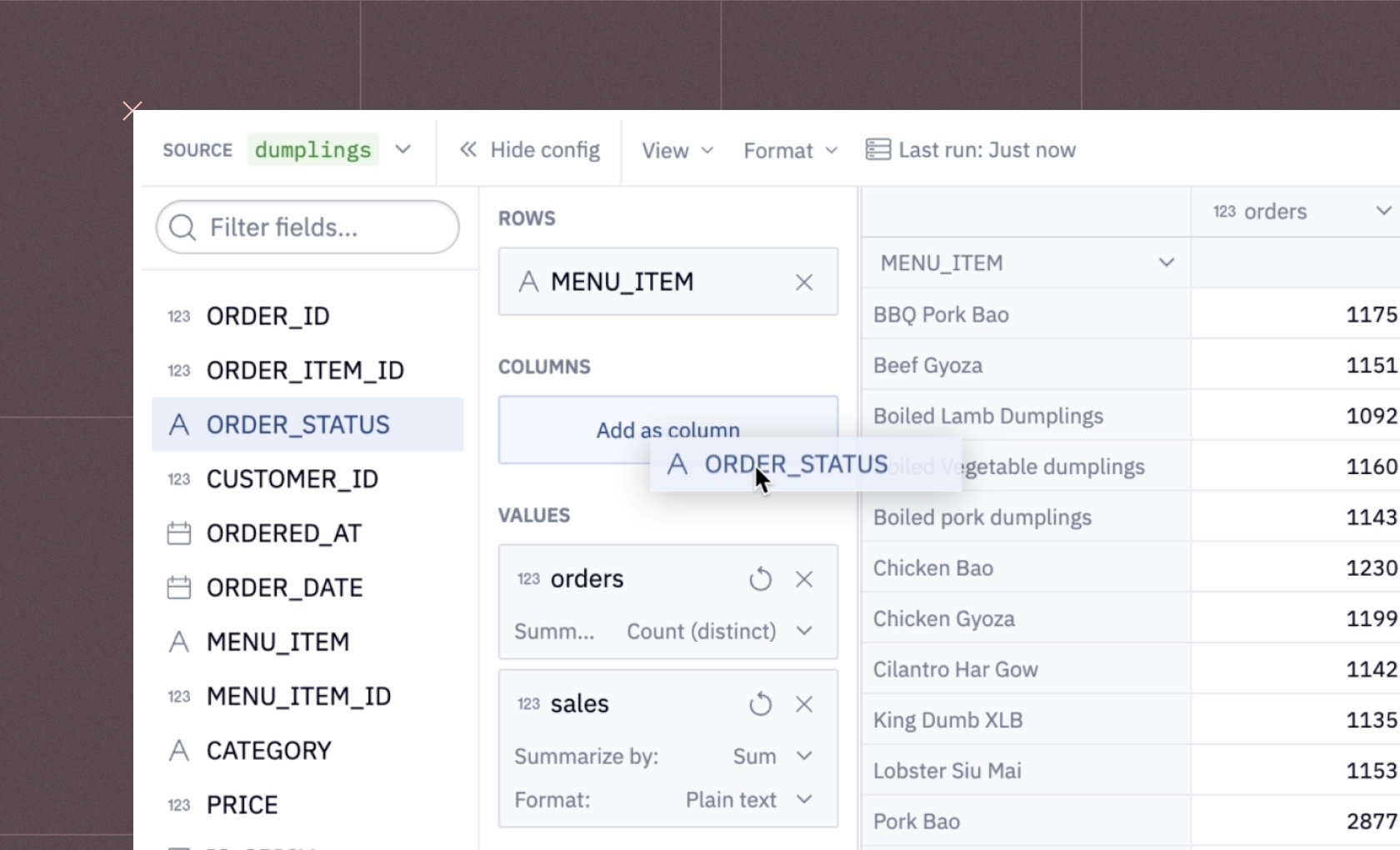

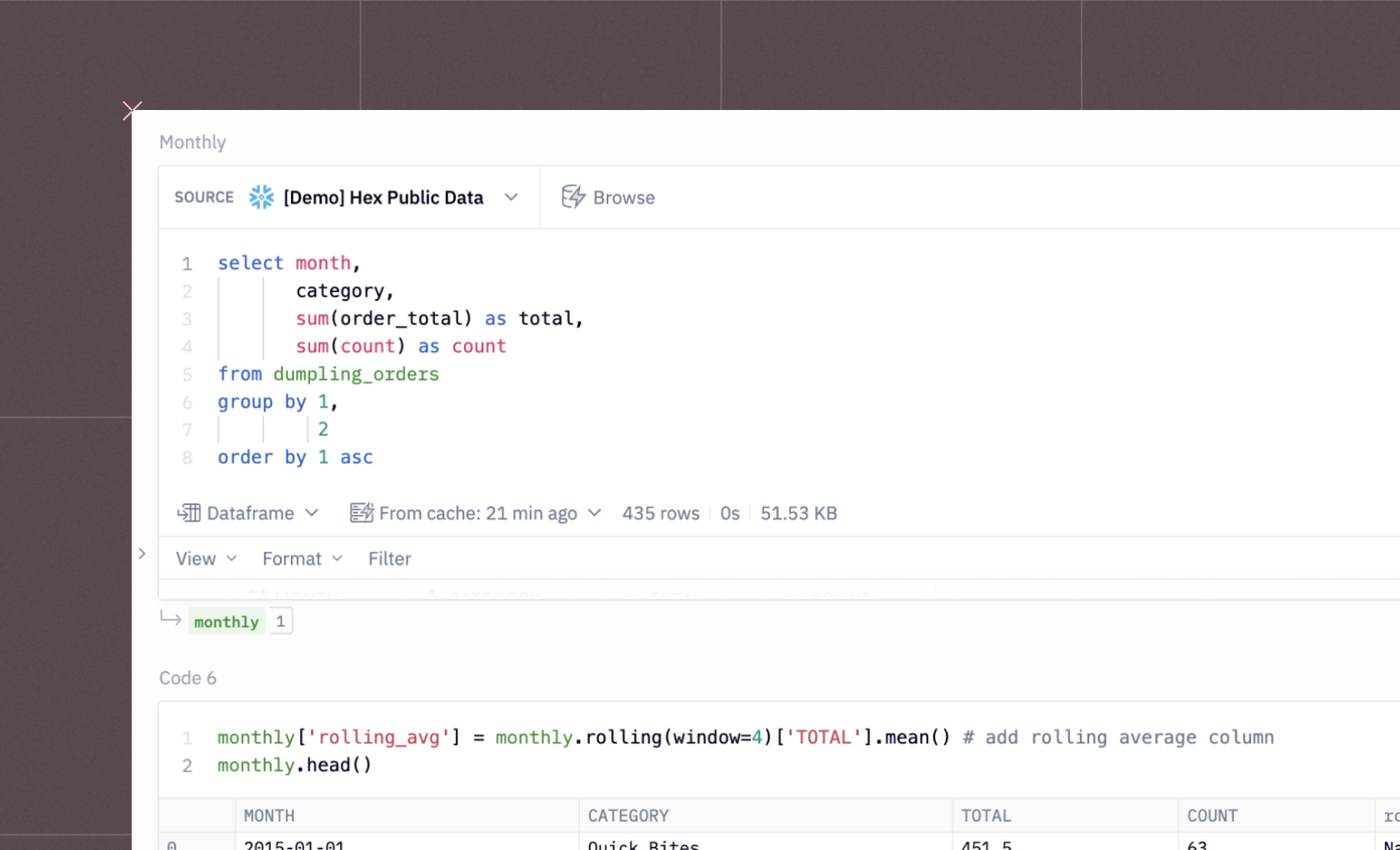

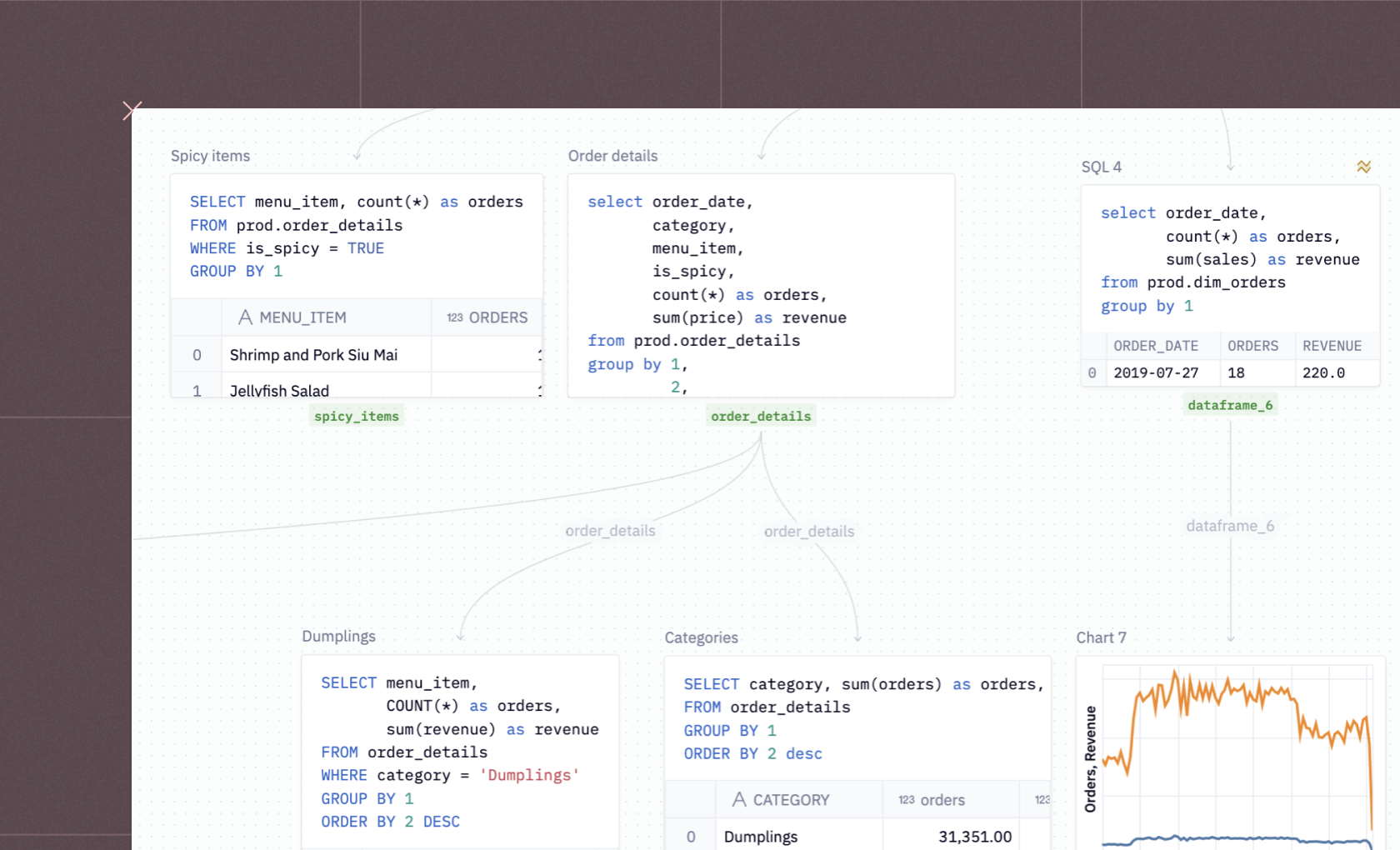

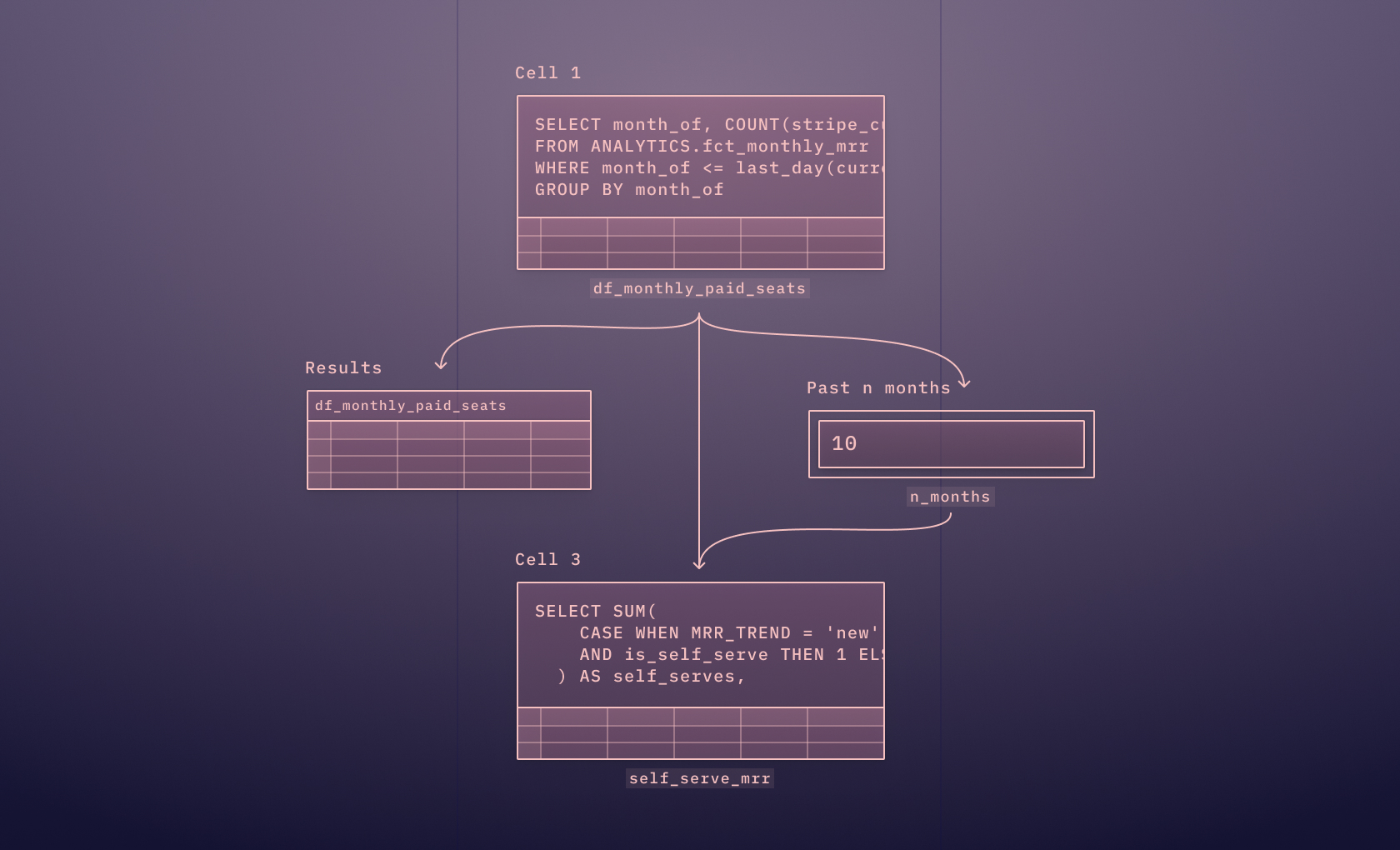

There are various ways to load the data in Hex, you can use a file uploader, or establish a connection to different databases and warehouses. We will load the data from a Snowflake Warehouse. It is as simple as writing an SQL command and the result will be loaded as a Pandas Dataframe.

Note: Since Hex allows us to load the data as a DataFrame it becomes easier for us to manipulate the data.

To load the data from the Snowflake warehouse, you can use the following SQL command:

select * from demo_data.demos.food_and_weather

Collect Basic Information

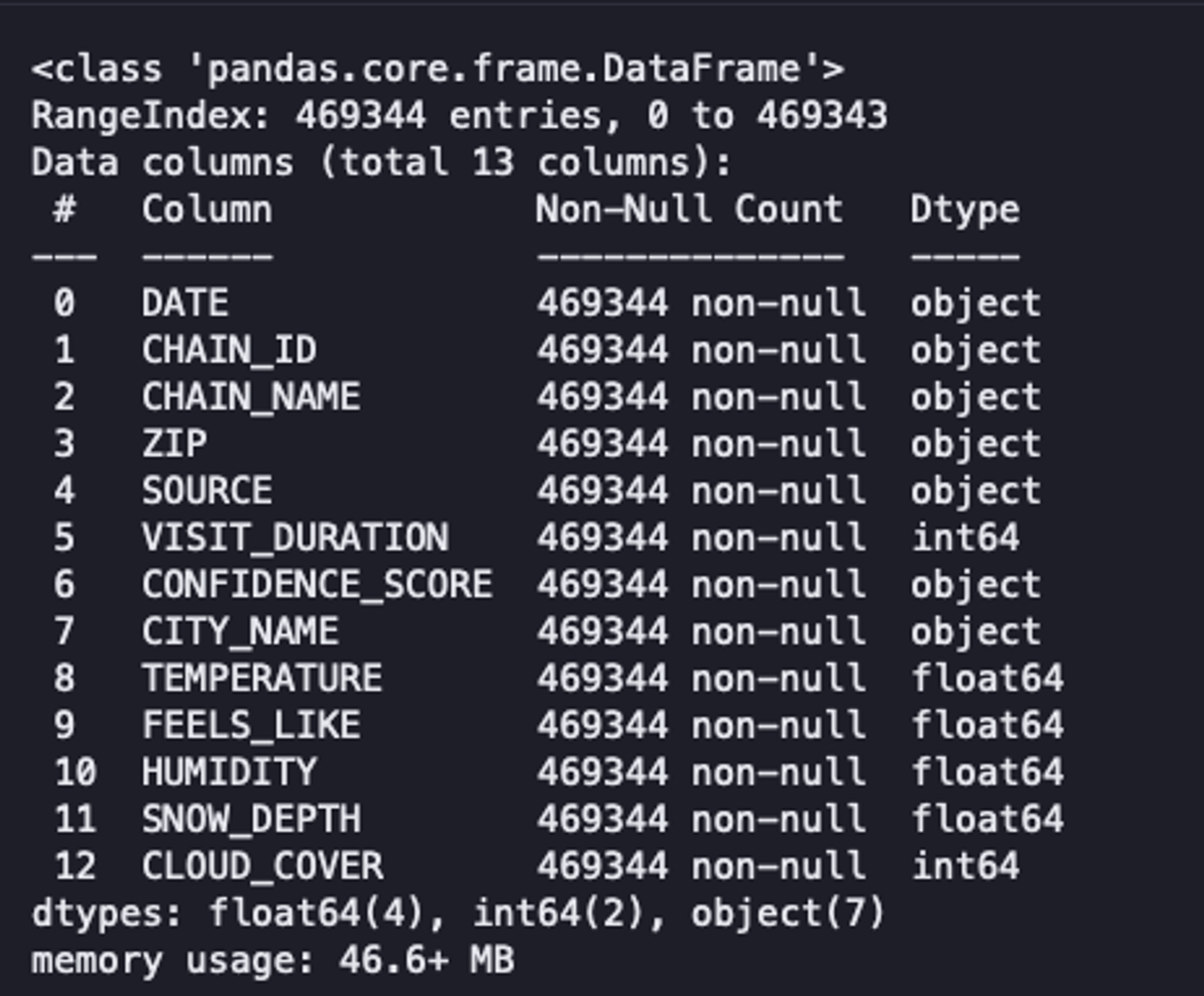

Once the dataset is loaded, you can take a glance at information about the data such as different column names, the shape of data, the data types of each column, and several non-null values using the info() method from Pandas.

data.info()

As you can see, our data has 469,344 rows, 13 columns, no null values, and a combination of data types. Although this method gives us an overview of data it does not help a lot to make informed decisions.

Descriptive Statistics

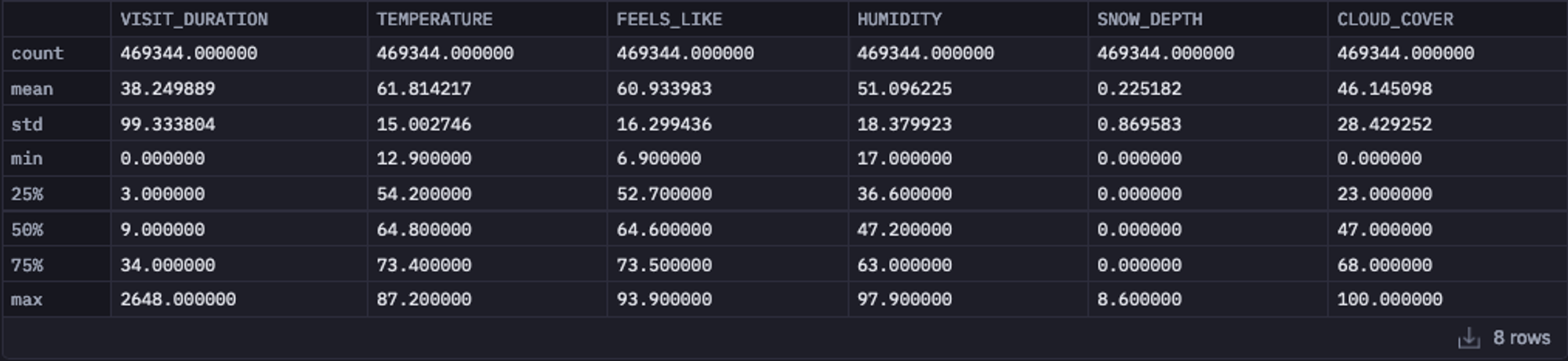

Next, you can check some of the statistical values such as mean, median, max, min, standard deviation, and IQR for the numerical features using the describe() method of Pandas.

data.describe()

Analysts can quickly detect outliers, evaluate data dispersion, and comprehend the overall trend of the collection by using these summary statistics. Descriptive statistics serve as a starting point for deeper analysis, helping to form hypotheses, guide further exploration, and communicate key insights to stakeholders.

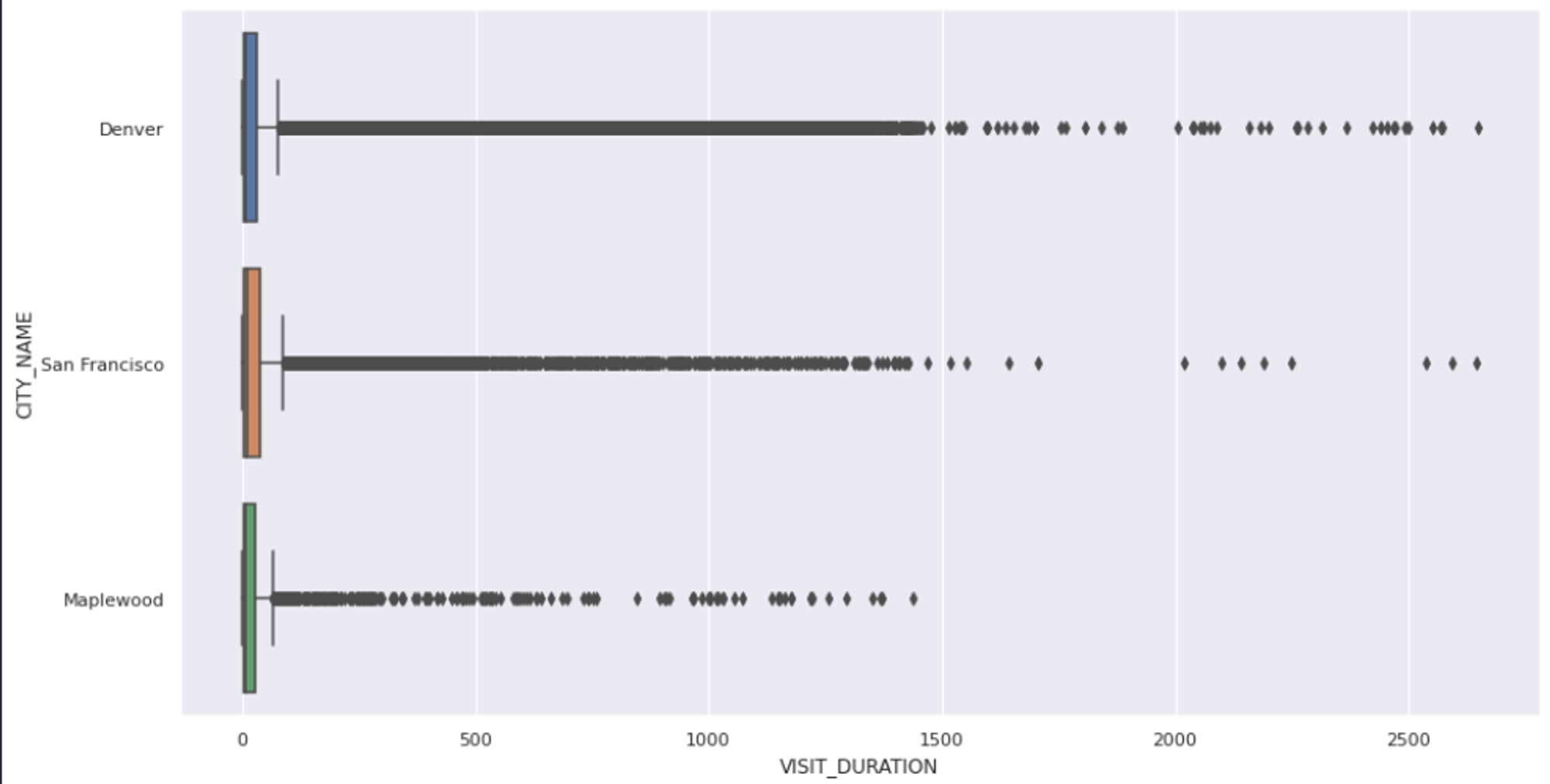

For example, if you check the VISIT_DURATION and the SNOW_DEPTH columns, you will find that the mean value (center of the distribution) and the standard deviation (dispersion of the values) are quite low as compared to the max value of these columns. Moreover, the maximum VISIT_DURATION is 85 times greater than the VISIT_DURATION in the third quartile. When the data are organized in ascending order, the third quartile indicates the value below which 75% of the data can be located. This indicates that, out of all visitors, 75% spend little more than 34 minutes at their preferred location. Subsequently, the figure abruptly increases to 2,648 minutes. This gives us a hint about the presence of outliers in these columns.

Data Cleaning

Next comes the data cleaning stage where we process the data to remove outliers, missing values, redundant data, and standardize the data to bring feature values to the same scale.

Dealing with Outliers

Outliers can ruin your entire statistical analysis if not handled properly. These outliers can be the result of data entry errors, sampling problems, or natural variation. The two most common methods of identifying outliers are IQR (already seen with the describe() method) and Z Score. Once identified you need to handle them properly. It is quite prominent to have the domain knowledge as sometimes these outliers may convey some crucial information and by removing them you could possibly lose it.

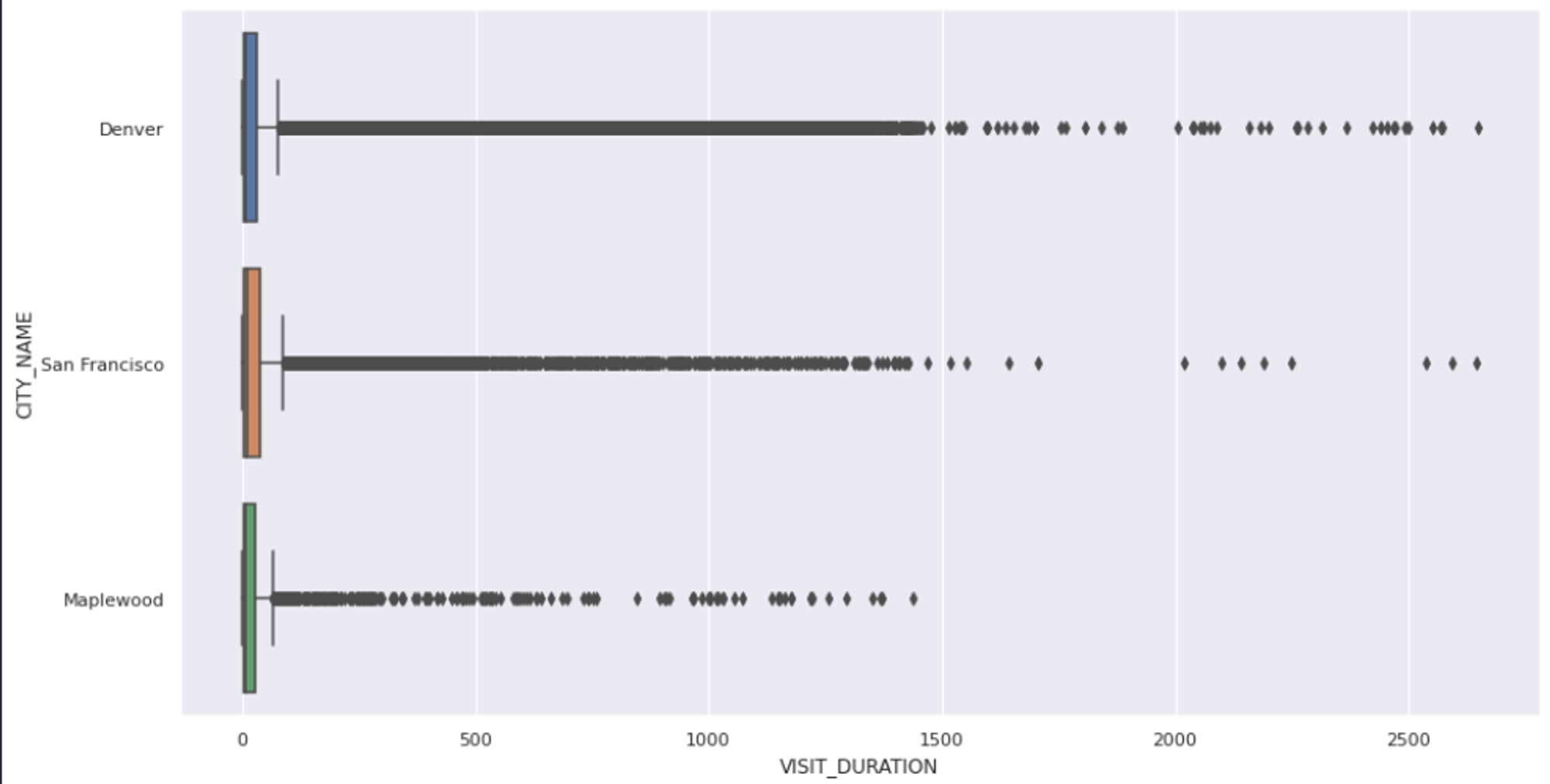

The first thing to handle the outliers is visualizing the features that might have them. This can give you an overall picture of the feature and its values. The most common way to visualize the outliers is using the box plots. You can use the boxplot() method from Seaborn to create a chart between VISIT_DURATION and CITY_NAME columns as follows:

sns.set(rc = {'figure.figsize':(15,8)})

sns.boxplot(x = data['VISIT_DURATION'], y = data['CITY_NAME']);

As you can see, the above graph confirms the presence of outliers but it does not specifically tell the exact values of these outliers. So we’ll go ahead and use the IQR approach to remove all the values that fall outside the upper (75) and lower (25) bounds of our results.

def outliers(values):

q1, q3 = np.percentile(values, [25, 75])

iqr = q3 - q1

lower = q1 - (1.5 * iqr)

upper = q3 + (1.5 * iqr)

return lower, upper

# calculate the lower and upper outlier limit

lower, upper = outliers(data['VISIT_DURATION'])

# create filters that remove all rows that fall outside of these limits

lower_mask = (data['VISIT_DURATION'] > lower)

upper_mask = (data['VISIT_DURATION'] < upper)

# apply the filters to the dataset

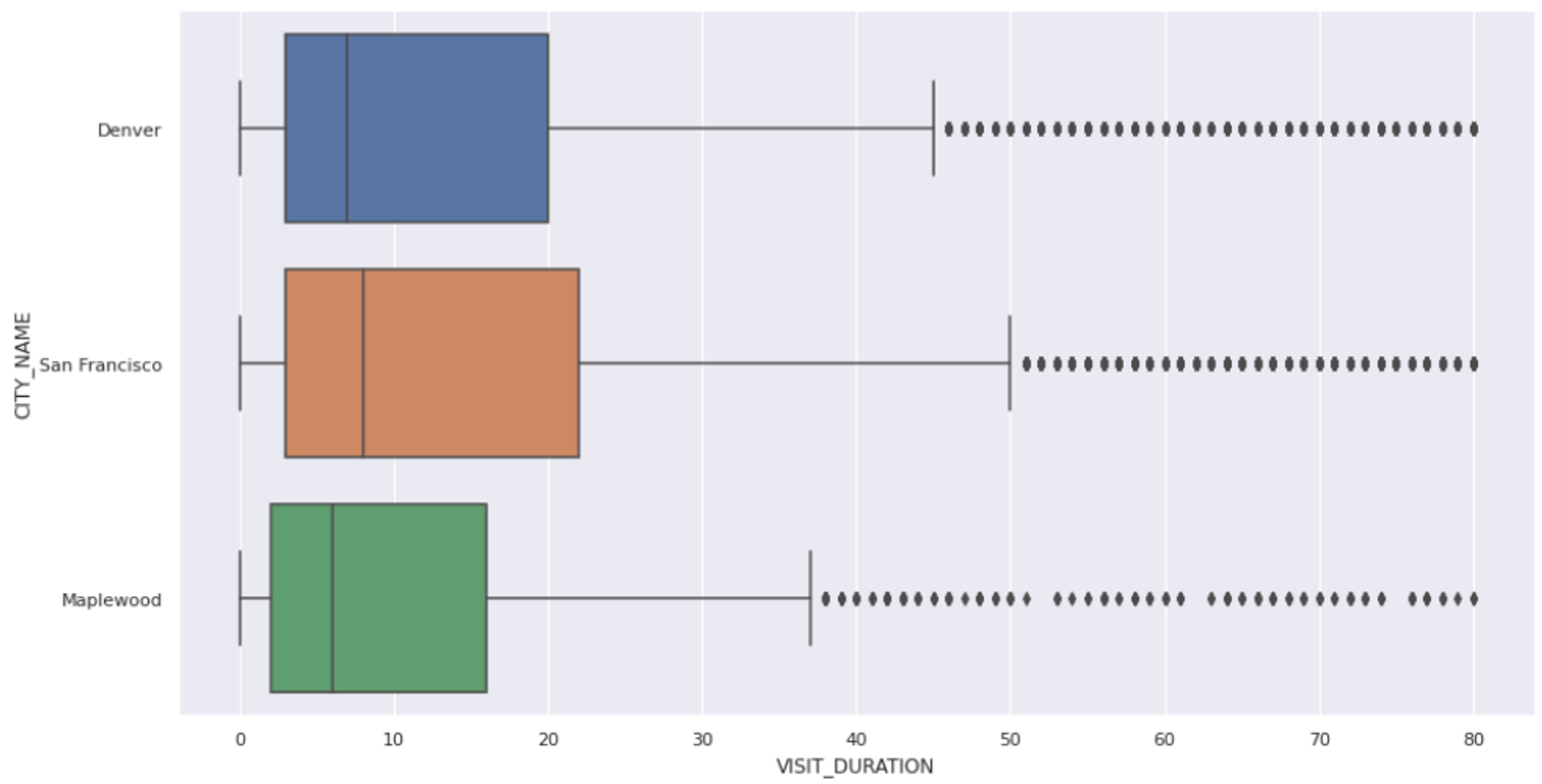

cleaned_data = data[lower_mask & upper_mask]As you can see in the above code, we identify the lower and upper bound of the values using the outliers() method and then filter all the values that are past this range. Finally, when you plot the graph of the filtered data, you will see that there is no outlier present.

sns.boxplot(x = cleaned_data['VISIT_DURATION'], y = cleaned_data['CITY_NAME']);

Handling Missing Values

When dealing with real-world data, it is quite common to have missing data (NaN values) in the dataset. These NaN values affect the reliability of analysis and modeling. There are several ways to handle these missing values, three of the widely used ones are:

Dropping the rows from the dataset that contain NaN values. This is not a good approach as most of the time we tend to lose the important information that could be crucial for analysis.

Replacing the NaN values with the measures of central tendency (mean, median, and mode). However, this approach assumes that the missing values are missing at random.

Finally, use the machine learning algorithms to predict the missing values using the rest of the features in the dataset.

Our current dataset does not contain any missing values so we need not take any action on this part.

Data normalization and standardization

Another common problem of the real-world dataset is that sometimes feature values do not share the same scale. This can be due to the wrong measurement, different measurement units for the same feature, nature of features, etc. When the features in the dataset have different scales, those with larger magnitudes may dominate the learning process. It causes the ML models to converge slower as compared to the same scale features.

The solution to this problem is standardizing the values in a feature to bring them all to the same scale. The most common approaches are Min Max Scaling and Standard Scaling. You can learn more about them here. As our data is in good shape, we need not apply any external scaling to it.

Often you have duplicate rows in your data, you need to get rid of these duplicate rows to ensure the data integrity.

Feature Engineering

In real-world scenarios, sometimes your data can have a lot of features (thousands of features), sometimes it will have a very small number of features, and finally, it can have just the right amount of features. In any case, there might be a need to manipulate your dataset to remove the existing features, create new features from the existing ones, and combine different features. This process of manipulating the data is known as feature engineering. The need for feature engineering differs from use case to use case but there is a high possibility that your dataset will require feature engineering in one way or the other.

Some of the common feature engineering operations are, creating new features, reducing the number of features using dimensionality reduction techniques such as PCA, and handling the categorical variables for effective ML and deep learning modeling.

For our dataset, the number of features looks quite nominal but we will still create a feature i.e. coordinates (lat and long) for each venue to help us plot the values on the map. Now you might have guessed why exactly you need the pgeocode library of Python. Let’s create a Python method that accepts a dataframe and based on the zip code (postal code) it returns the latitude and longitude information.

def add_coordinates(frame):

'''

Accepts a dataframe and returns it with the new columns lat and lng

'''

# create geocoding object

nomi = pgeocode.Nominatim('us')

frame = frame.copy()

# list of each unique zip code

unique_zips = frame['ZIP'].unique().tolist()

coordinates = {}

# for each unique zip code

for zipcode in unique_zips:

# get the location data

location = nomi.query_postal_code(zipcode)

# extract and add the coordinates to our coordinate dictionary

coordinates.setdefault(zipcode, {'lat':location.latitude, 'lng':location.longitude})

# add the coordinates to the dataframe

frame['lat'] = frame['ZIP'].apply(lambda row: coordinates[row]['lat'])

frame['lng'] = frame['ZIP'].apply(lambda row: coordinates[row]['lng])

return frame, coordinates

cleaned_data, coordinates = add_coordinates(cleaned_data)

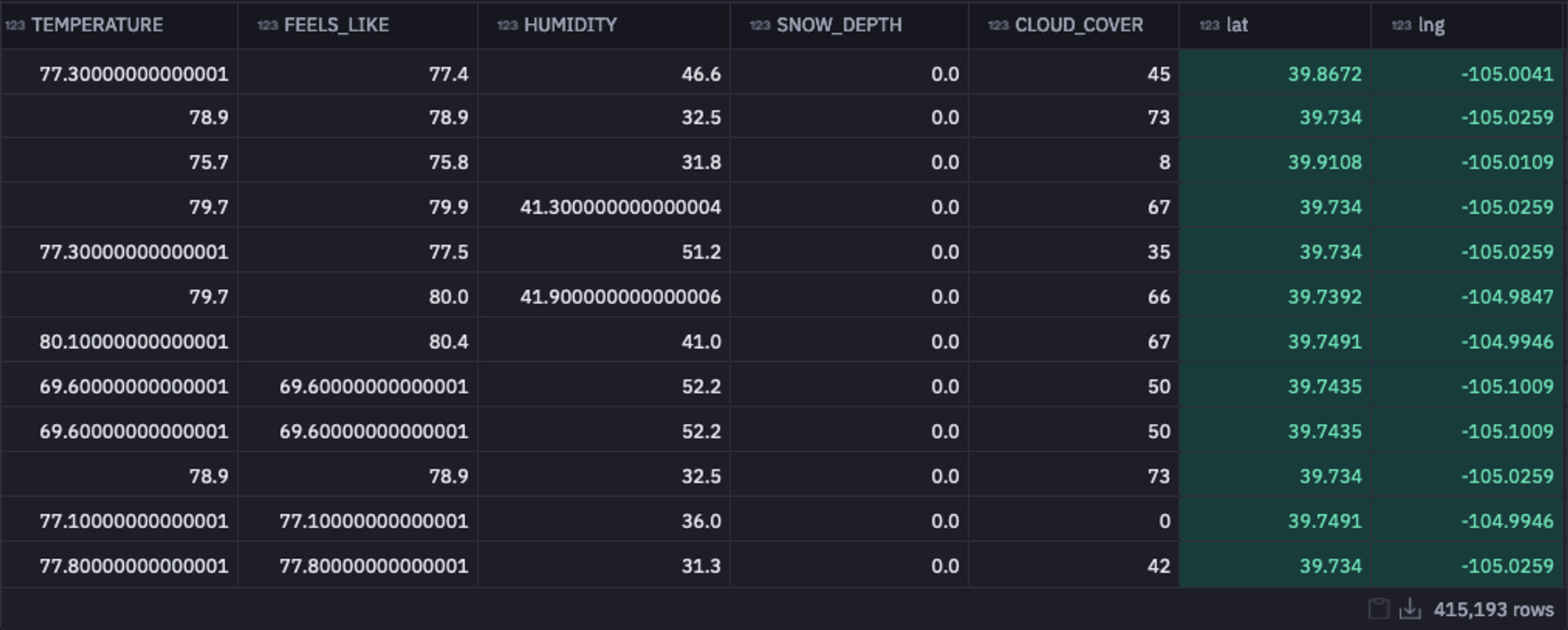

As you can see in the above image, the latitude and longitude information for all postal codes is added to the dataframe. With this, we are all ready to proceed to the most important stage of EDA i.e. answering the most important business questions with the help of different charts and graphs.

Visual Question Answering

The process of analyzing the data or visual question answering has different types of analysis that can help you answer different questions related to data. These analyses can be broadly classified into three categories:

Univariate Analysis: This type of analysis deals with analyzing a single feature at a time and reveals all the descriptive properties of that feature. The data types that we deal with under univariate analysis are numeric, categorical ordered, and categorical unordered. The most common plots and graphs used for univariate analysis are Bar charts, histograms, and pie charts.

Bivariate Analysis: Bivariate analysis helps you explore the relationship between two features in a dataset. This analysis aims to analyze the numeric-numeric, numeric-categorical, and categorical-categorical features relationships in the dataset. scatter plots, histograms, box plots, heat maps, and bar graphs are the most common graphs used for bivariate analysis.

Multivariate Analysis: This type of analysis aims to identify and recognize the patterns in a set of features. Heatmaps and Correlation matrices are popular analysis techniques used for this multivariate analysis.

Now that you have an idea about the different categories of visualization, let’s perform the visual question answering on our dataset. We will try to answer some important business questions such as What are the most popular venues?, How does humidity affect visit times?, and Which popular venues do people stay at the longest?.

What are the Most Popular Venues?

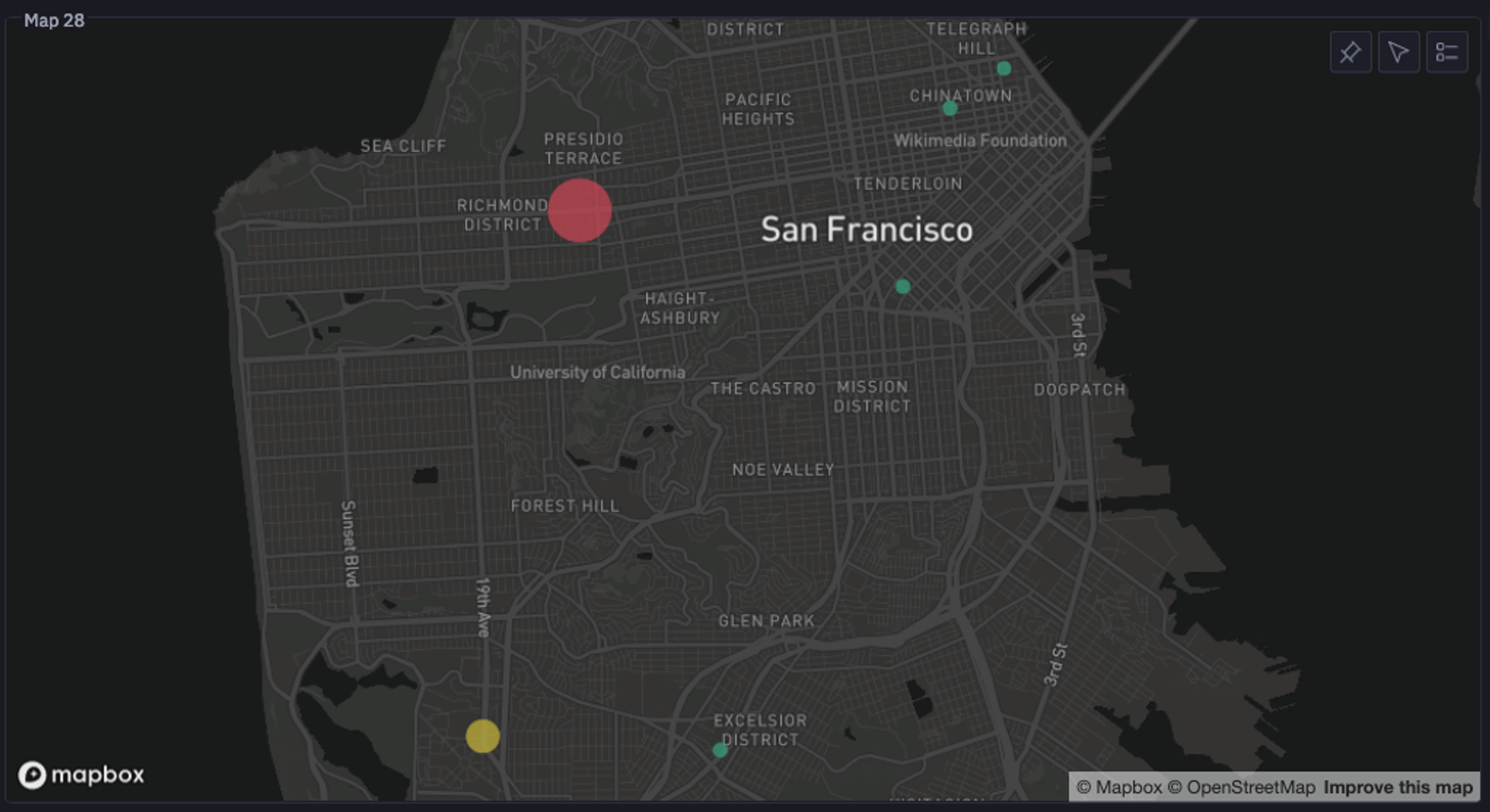

Let’s start with the first one. As a user, if I am visiting a city, I would like to know the most popular restaurants there to make an informed decision. So to answer this question we will identify which zip codes are the most popular for a venue of choice. To do so, let’s first filter out the data based on the city level and then on the venue level.

city_df = cleaned_data[cleaned_data['CITY_NAME'] == city_filter]

venue_df = city_df[city_df['CHAIN_NAME'] == venue]Then we will group the filtered data by zip code to determine the most popular venue location.

def coordinate_mapping(zip_code):

coordinates = venue_df[venue_df['ZIP'] == str(zip_code)][['lat', 'lng']].iloc[0].to_list()

return coordinates

# group by zip code to determine the most popular venue location

venue_grouped = venue_df.groupby(['ZIP', 'CHAIN_NAME']).agg({'VISIT_DURATION':'sum', 'DATE': 'count'}).reset_index()

venue_grouped.rename({'DATE':'total_visits', "VISIT_DURATION":"visit_duration_sum"}, axis = 1, inplace = True)

venue_grouped['coordinates'] = venue_grouped['ZIP'].apply(lambda row: coordinate_mapping(row))

most_popular_zip = venue_grouped.sort_values(by = 'visit_duration_sum', ascending = False)['ZIP'].iloc[0]

total_visits = venue_grouped.sort_values(by = 'total_visits', ascending = False)['total_visits'].iloc[0]In the above code, we get the coordinates of the most popular venues and Hex helps us plot them. You might now be able to see a graph like this that clearly states which zipcode is the home of the most popular venue in the selected city with the total number of visits.

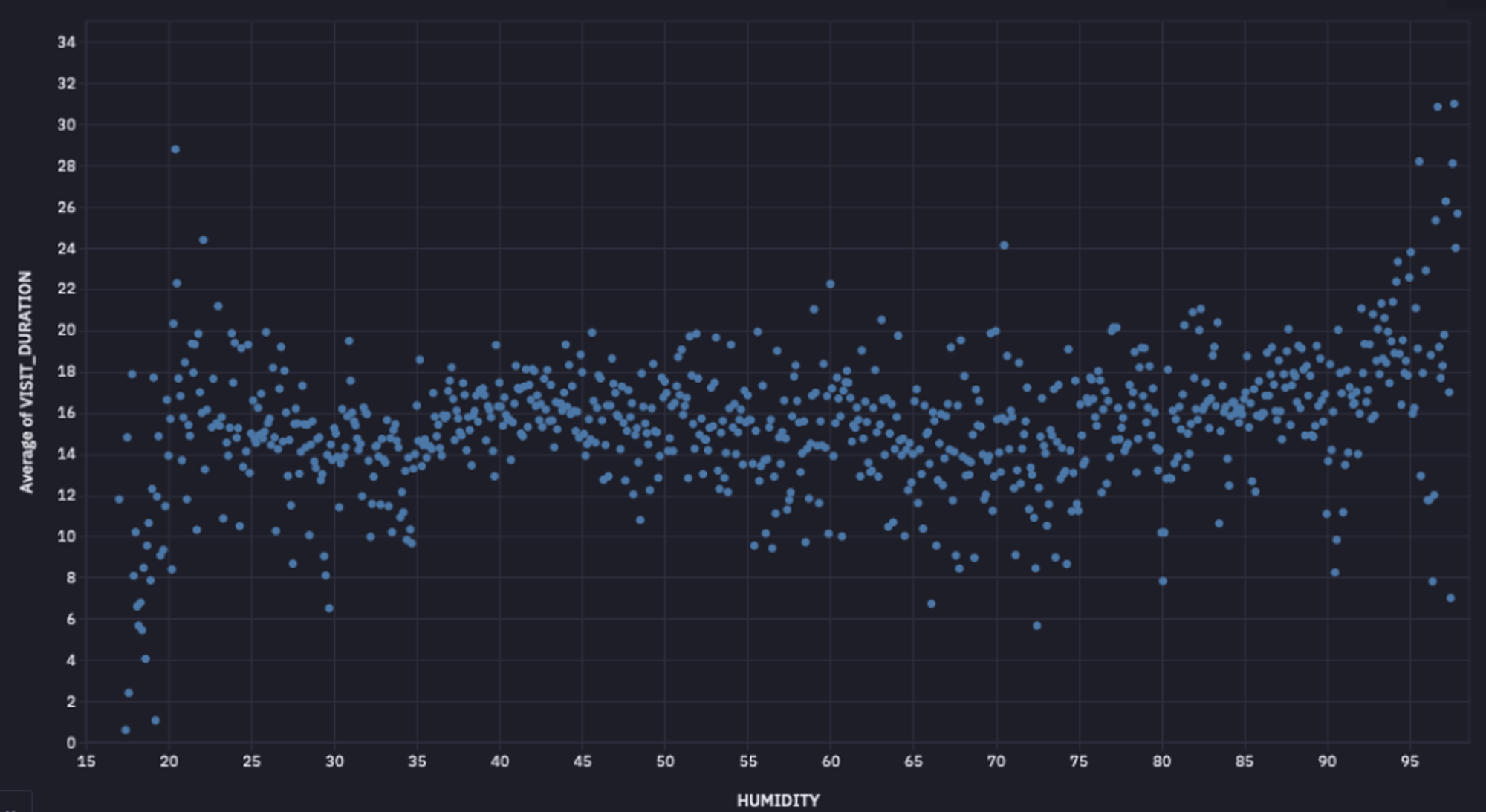

How does Humidity Affect Visit Times?

Ideally, the change in weather affects the restaurant visits. The higher the humidity, the higher the discomfort for people, so we could expect a pattern with a change in humidity. Since it is a bivariate analysis, we will create a scatter plot to identify the relation between humidity and the average amount of time people stay at a venue.

Hex allows you to create the charts without writing a single line of code. as you can see below:

As you can observe, there is no clear relationship between humidity and the number of visits as depicted by the graph. To verify our finding, we can also check the correlation between these two columns. To apply the Pearson correlation, you can use the pearsonr() method as follows:

corr, _ = pearsonr(cleaned_data['HUMIDITY'], cleaned_data['VISIT_DURATION'])The correlation value will come out as -0.035 which is a clear indicator that there is no direct relation between these two variables.

Similarly, you can also plot the graphs or calculate the correlation for other weather conditions and check how they affect the venue visits.

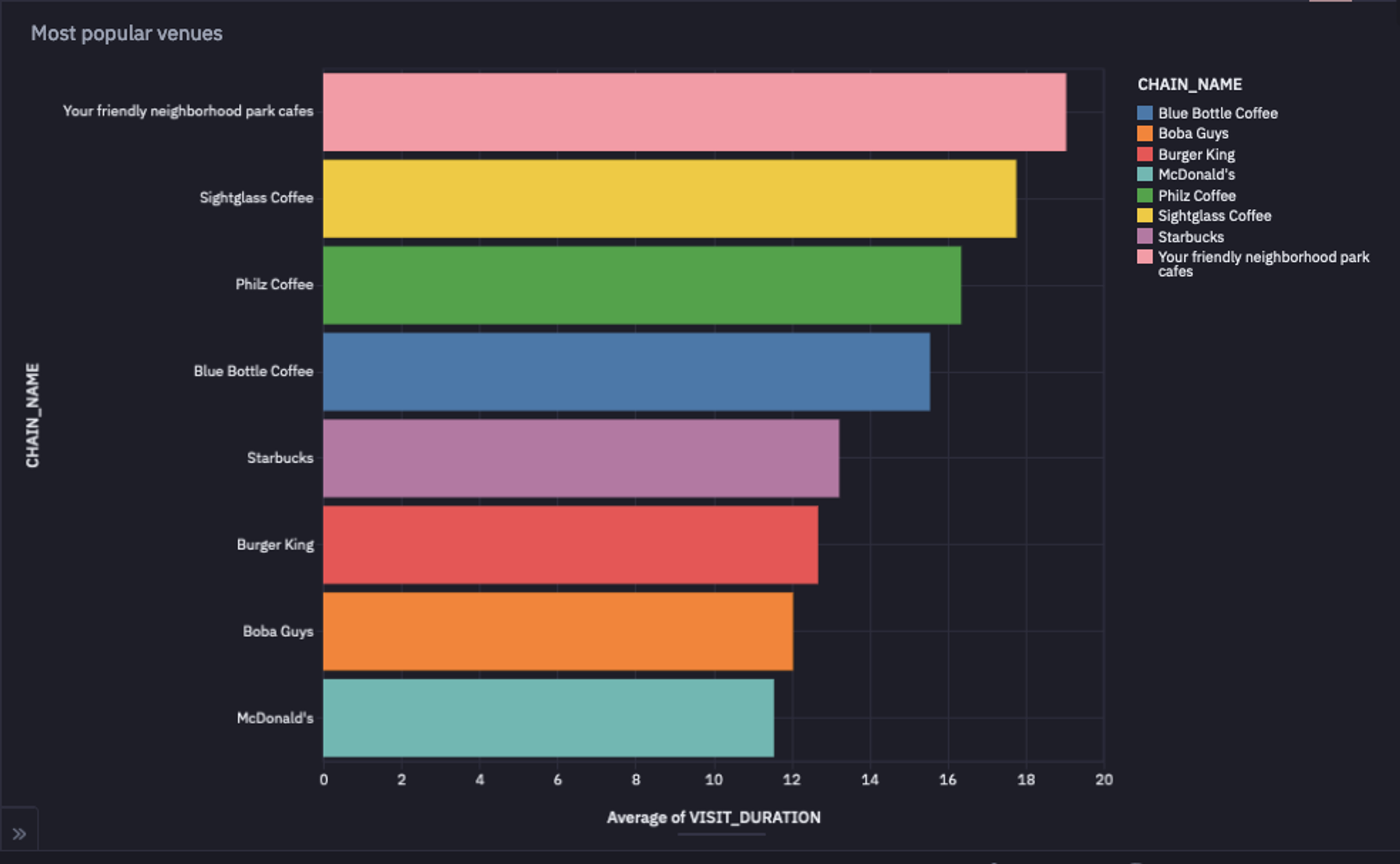

Which Popular Venues do People Stay at the Longest?

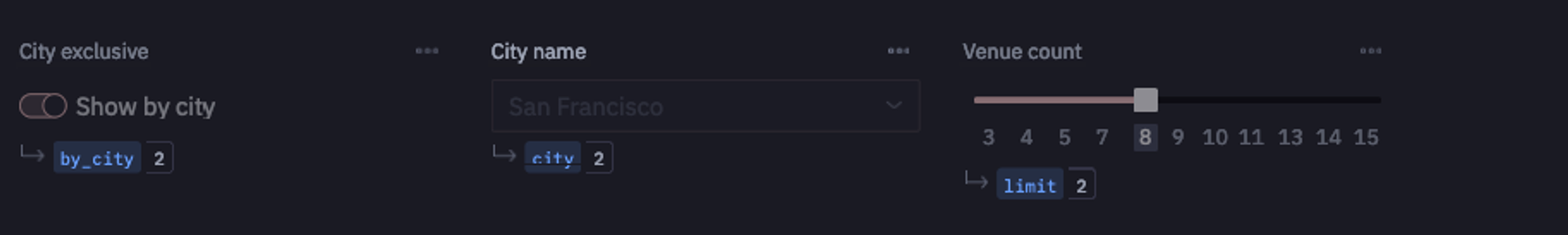

Finally, let’s answer our last question i.e. the most popular venues where people like to stay for the longest. You can use the built-in filtering feature of Hex to decide on the different parameters such as city and venue count.

Then you can write the logic to groupby the venues by CHAIN_NAME and identify the top venues based on the venue count parameter.

most_popular = (

cleaned_data.groupby("CHAIN_NAME")

.count()

.sort_values(by="DATE", ascending=False)

.reset_index()

.head(limit)['CHAIN_NAME'].tolist()

) if not by_city else (

cleaned_data[cleaned_data['CITY_NAME'] == city].groupby("CHAIN_NAME")

.count()

.sort_values(by="DATE", ascending=False)

.reset_index()

.head(limit)['CHAIN_NAME'].tolist()

)

top_ten = cleaned_data[cleaned_data['CHAIN_NAME'].isin(most_popular)]

As you can observe in the above image, Your Friendly Neighbourhood Park Cafes is the place where people like to stay the longest.

Learning EDA is a critical part of becoming a data analyst. It allows you to truly understand the data you are dealing with, which in turn gives you the ability to intuit about the data, build better models, and glean better insights. If you want to learn more about this topic, you can read this other Hex post on EDA.

See what else Hex can do

Discover how other data scientists and analysts use Hex for everything from dashboards to deep dives.

Ready to get started?

You can use Hex in two ways: our centrally-hosted Hex Cloud stack, or a private single-tenant VPC.